
Software Integration Testing

Integration testing follows unit testing, with the goal of validating the architectural design or high-level requirements. It can be done bottom-up or top-down, and many software organizations are likely to combine both approaches.

Bottom-Up Integration

Testers take unit test cases and remove stubs or combine them with other code units that make up higher levels of functionality. These types of integrated test cases are used to validate high-level requirements.
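As a minimal sketch of this idea, assume two hypothetical units: `read_speed_kph` (lower level) and `should_warn` (higher level). None of these names come from a real project. The unit test isolates the higher-level logic with a stub, and the integrated test removes the stub so that both real units run together.

```python
# Hypothetical units for illustration only.
def read_speed_kph(raw_counts: int) -> float:
    """Lower-level unit: convert raw wheel-sensor counts to km/h."""
    return raw_counts * 0.5


def should_warn(speed_kph: float, limit_kph: float) -> bool:
    """Higher-level unit: decide whether to raise an overspeed warning."""
    return speed_kph > limit_kph


def overspeed_warning(raw_counts, limit_kph, speed_source=read_speed_kph):
    """Combined behavior; speed_source can be replaced by a stub."""
    return should_warn(speed_source(raw_counts), limit_kph)


def test_unit_with_stub():
    # Unit level: the lower-level unit is replaced by a stub with a fixed value.
    stub = lambda raw_counts: 130.0
    assert overspeed_warning(0, 120.0, speed_source=stub) is True


def test_integrated_without_stub():
    # Integration level: the stub is removed and the real conversion runs.
    assert overspeed_warning(260, 120.0) is True   # 260 counts -> 130 km/h
    assert overspeed_warning(200, 120.0) is False  # 200 counts -> 100 km/h


test_unit_with_stub()
test_integrated_without_stub()
```

The integrated test exercises the same requirement as the unit test, but a failure can now point to either unit or to the interface between them.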

Top-Down Integration

In this approach, the high-level modules or subsystems are tested first. Testing then progressively includes the lower-level modules (sub-subsystems). This approach assumes that the significant subsystems are complete enough to include and test as a whole.
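The following sketch shows one way top-down integration can look in practice. All class names are hypothetical. The high-level controller is tested first against stubs, and a real lower-level module then replaces its stub in a later stage.

```python
class BrakeActuatorStub:
    """Stand-in for a lower-level module that is not integrated yet."""

    def __init__(self):
        self.commands = []

    def apply(self, pressure_pct):
        self.commands.append(pressure_pct)


class DistanceFilterStub:
    """Stub: returns the latest sample without any filtering."""

    def smooth(self, samples_m):
        return samples_m[-1]


class DistanceFilter:
    """Real lower-level module: averages the samples."""

    def smooth(self, samples_m):
        return sum(samples_m) / len(samples_m)


class EmergencyBrakeController:
    """High-level module under test."""

    def __init__(self, distance_filter, actuator):
        self.distance_filter = distance_filter
        self.actuator = actuator

    def step(self, samples_m):
        distance = self.distance_filter.smooth(samples_m)
        pressure = 100 if distance < 5.0 else 0
        self.actuator.apply(pressure)
        return pressure


# Stage 1: the controller is tested with stubs for both lower-level modules.
stage1 = EmergencyBrakeController(DistanceFilterStub(), BrakeActuatorStub())
assert stage1.step([10.0, 4.0]) == 100  # stub reports 4.0 m

# Stage 2: the real filter replaces its stub; the actuator is still stubbed.
actuator = BrakeActuatorStub()
stage2 = EmergencyBrakeController(DistanceFilter(), actuator)
assert stage2.step([10.0, 4.0]) == 0    # real filter reports 7.0 m
assert actuator.commands == [0]
```

The same input produces a different result once the real filter is integrated, which is the kind of interface-level behavior that integration testing is meant to expose.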

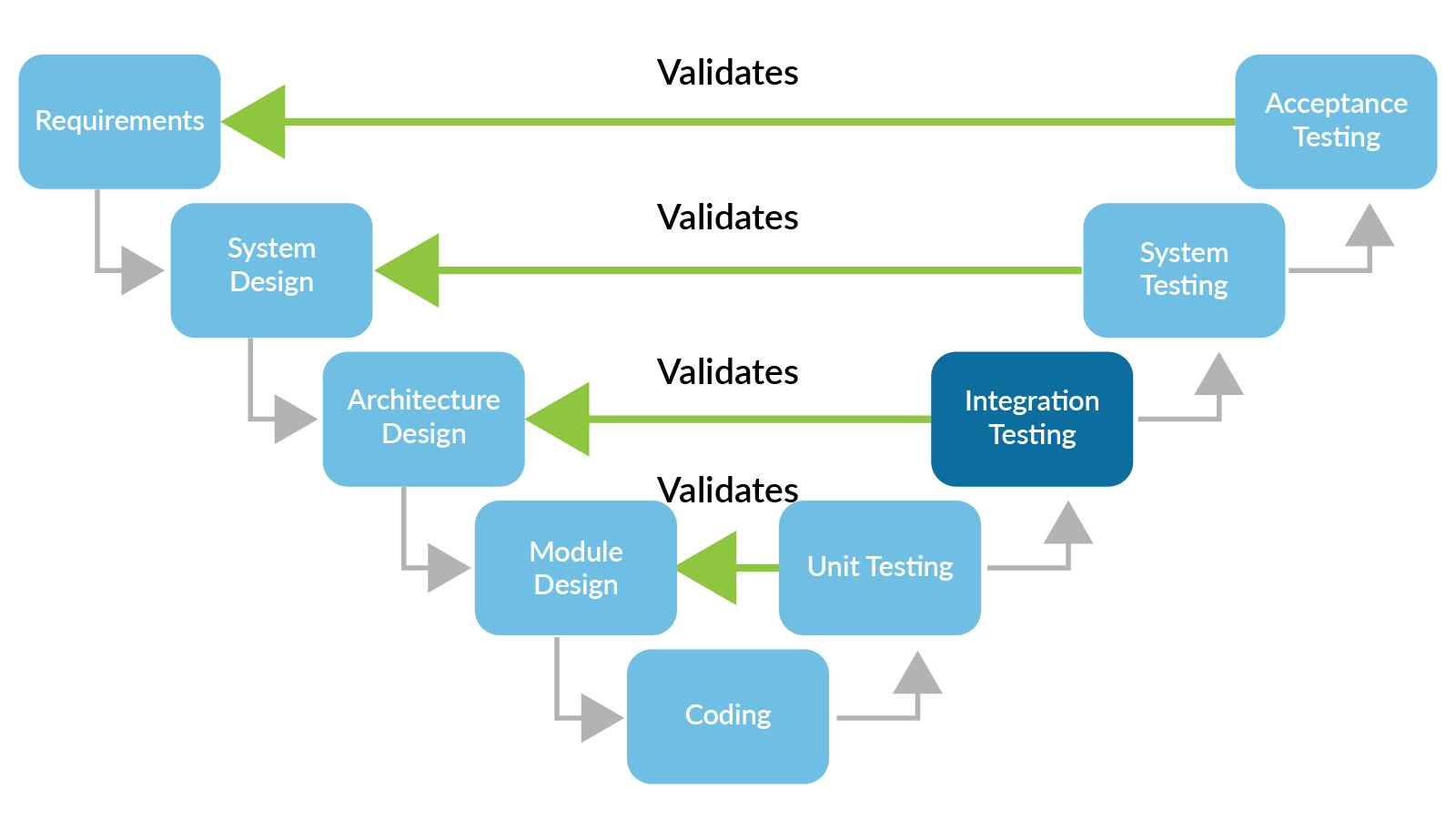

The V-model is good for illustrating the relationship between the stages of development and stages of validation. At each testing stage, more complete portions of the software are validated against the phase that defines it.

The V-model might imply a waterfall development method. However, there are ways to incorporate Agile, DevOps, and CI/CD into this type of product development while still being standards-compliant.

While the act of performing tests is considered software validation, it’s supported by a parallel verification process that involves the following activities to make sure teams are building the process and the product correctly:

- Reviews

- Walkthroughs

- Analysis

- Traceability

- Test

- Code coverage and more

The key role of verification is to ensure that the artifacts delivered from the previous stage are built to specification and comply with company and industry guidelines.

Integration and System Testing as Part of a Continuous Testing Process

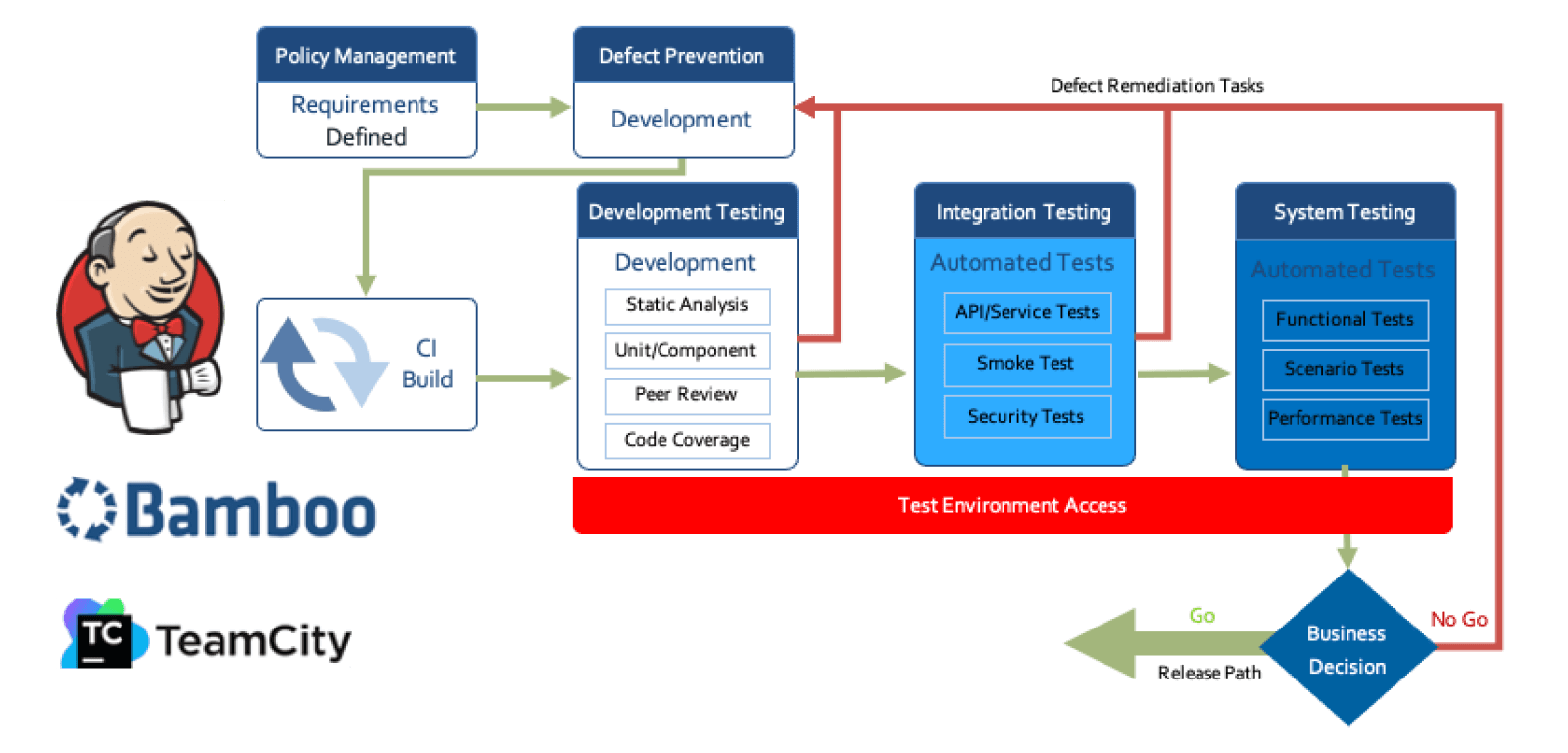

Performing some level of test automation is foundational for continuous testing. Many organizations start by automating manual integration and system testing (top-down) or unit testing (bottom-up).

To enable continuous testing, organizations need to focus on creating a scalable test automation practice that builds on a foundation of unit tests, which are isolated and faster to execute. Once unit testing is fully automated, the next step is integration testing and eventually system testing.

Continuous testing leverages automation and data derived from testing to provide a real-time, objective assessment of the risks associated with a system under development. Applied uniformly, it allows both business and technical managers to make better trade-off decisions between release scope, time, and quality.

Continuous testing isn’t just more automation. It’s a larger reassessment of software quality practices, driven by an organization’s cost of quality and balanced for speed and agility. Even within the V-model used in safety-critical software development, continuous testing is still a viable approach, particularly during testing phases such as unit testing and integration testing.

The diagram below illustrates how different phases of testing are part of a continuous process that relies on a feedback loop of test results and analysis.

Parasoft Analysis and Reporting in Support of Integration and System Testing

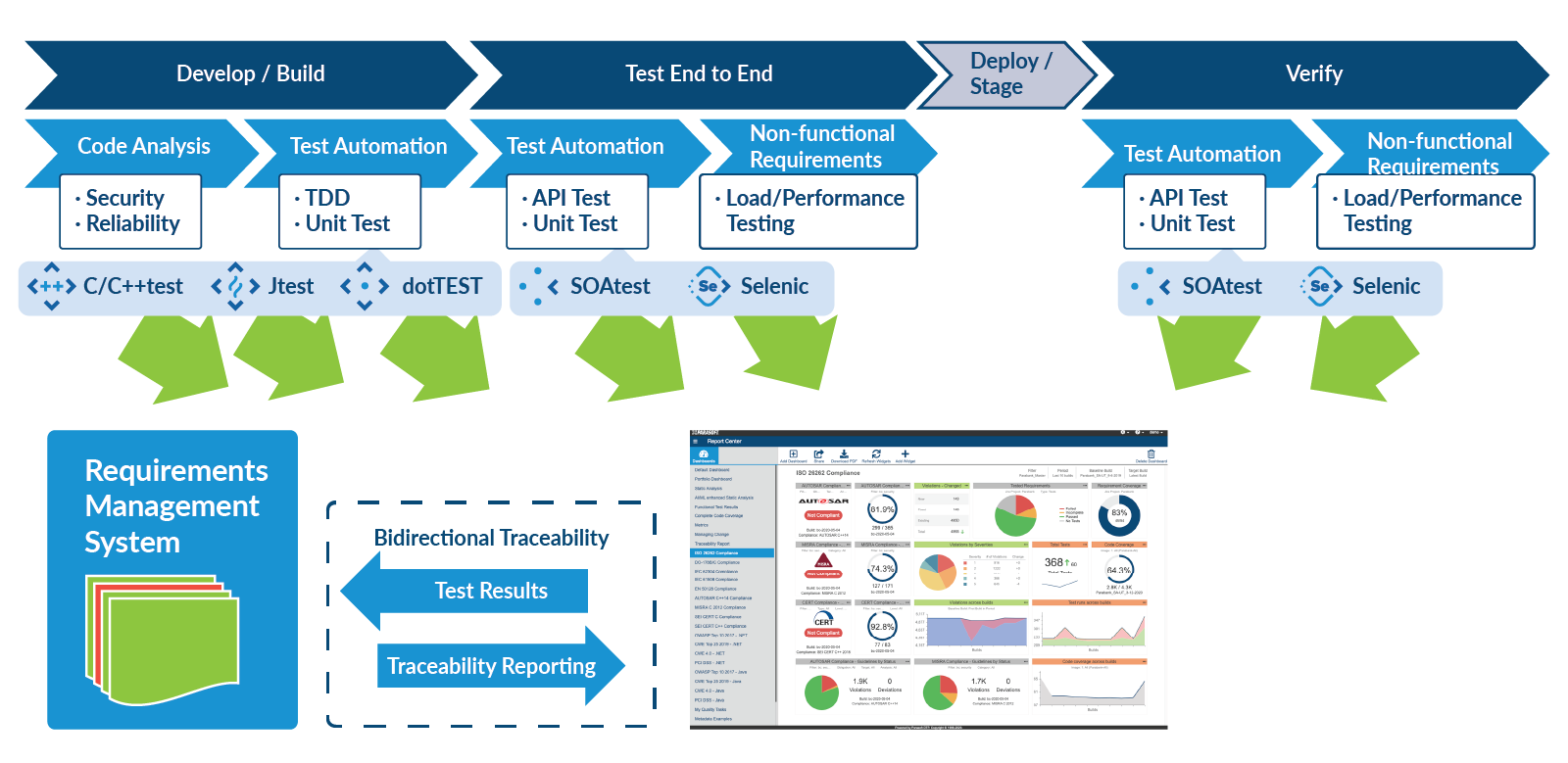

Parasoft test automation tools support validation (the actual testing activities) through test automation and continuous testing. These tools also support the verification of these activities, which means supporting the process and standards requirements. Two key aspects of safety-critical automotive software development are requirements traceability and code coverage.

Two-Way Traceability

Requirements in safety-critical software are the key driver for product design and development. These requirements include functional safety, application requirements, and nonfunctional requirements that fully define the product. This reliance on documented requirements is a mixed blessing because poor requirements are one of the critical causes of safety incidents in software. In other words, the implementation wasn’t at fault, but poor or missing requirements were.

Automating Bidirectional Traceability

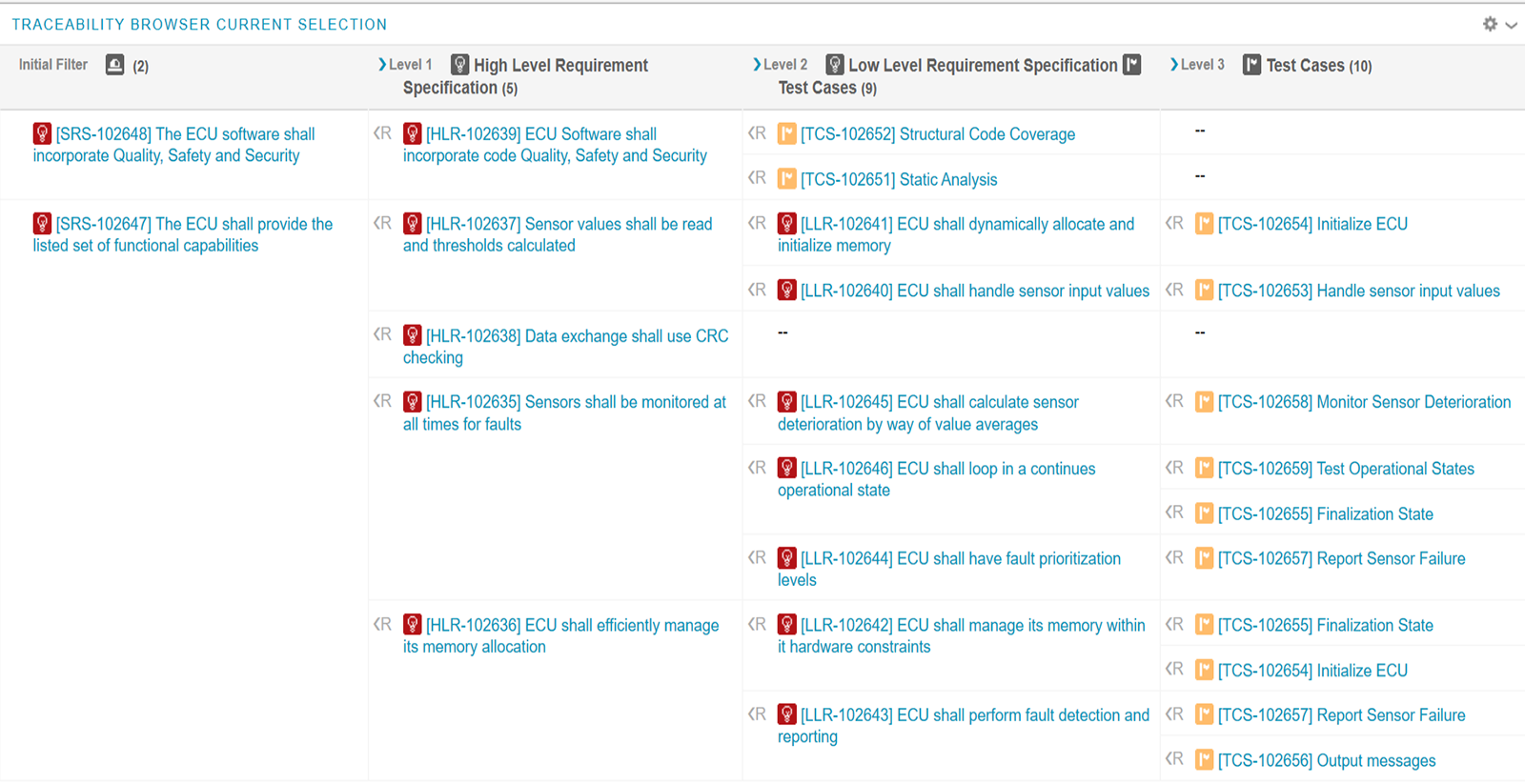

Maintaining traceability records on any sort of scale requires automation. Application lifecycle management tools include requirements management capabilities that are mature and tend to be the hub for traceability. Integrated software testing tools like Parasoft complete the verification and validation of requirements by providing automated bidirectional traceability to the executable test case. This includes the pass or fail result and traces down to the source code that implements the requirement.

Parasoft integrates with market-leading requirements management tools or ALM systems such as IBM DOORS Next, PTC Codebeamer, Polarion from Siemens, Atlassian Jira, Jama Connect, and others. As shown in the image below, each of Parasoft’s test automation solutions used within the development life cycle (C/C++test, C/C++test CT, Jtest, dotTEST, SOAtest, and Selenic) supports the association of tests with work items defined in these systems, such as requirements, defects, and test cases or test runs. Traceability is managed through Parasoft DTP’s central reporting and analytics dashboard.

Parasoft DTP correlates the unique identifiers from the management system with:

- Static analysis findings

- Code coverage

- Results from unit, integration, and functional tests

Results are displayed within Parasoft DTP’s traceability reports and sent back to the requirements management system. They provide full bidirectional traceability and reporting as part of the system’s traceability matrix.
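To make the idea of bidirectional traceability concrete, here is a minimal sketch. It is not Parasoft DTP's API or data model. The requirement IDs, test names, and results are invented for illustration. The sketch builds the forward view (requirement to tests and results) from the backward links (test to requirements) and reports gaps in both directions.

```python
# Hypothetical data: all identifiers and results below are invented.
requirements = ["REQ-1", "REQ-2", "REQ-3"]

# Backward links: each test names the requirements it verifies.
test_links = {
    "test_brake_engages": ["REQ-1"],
    "test_brake_releases": ["REQ-1", "REQ-2"],
    "test_logging": [],
}

test_results = {
    "test_brake_engages": "pass",
    "test_brake_releases": "fail",
    "test_logging": "pass",
}


def build_matrix(requirements, test_links, test_results):
    """Return (forward view, requirements without tests, tests without requirements)."""
    forward = {req: [] for req in requirements}
    for test, reqs in test_links.items():
        for req in reqs:
            result = test_results.get(test, "not run")
            forward.setdefault(req, []).append((test, result))
    untested = [req for req in requirements if not forward[req]]
    orphans = [test for test, reqs in test_links.items() if not reqs]
    return forward, untested, orphans


forward, untested, orphans = build_matrix(requirements, test_links, test_results)
print(forward["REQ-2"])  # [('test_brake_releases', 'fail')]
print(untested)          # ['REQ-3']
print(orphans)           # ['test_logging']
```

A requirement with no linked tests and a test with no linked requirement are both traceability gaps, which is why the matrix is read in both directions.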

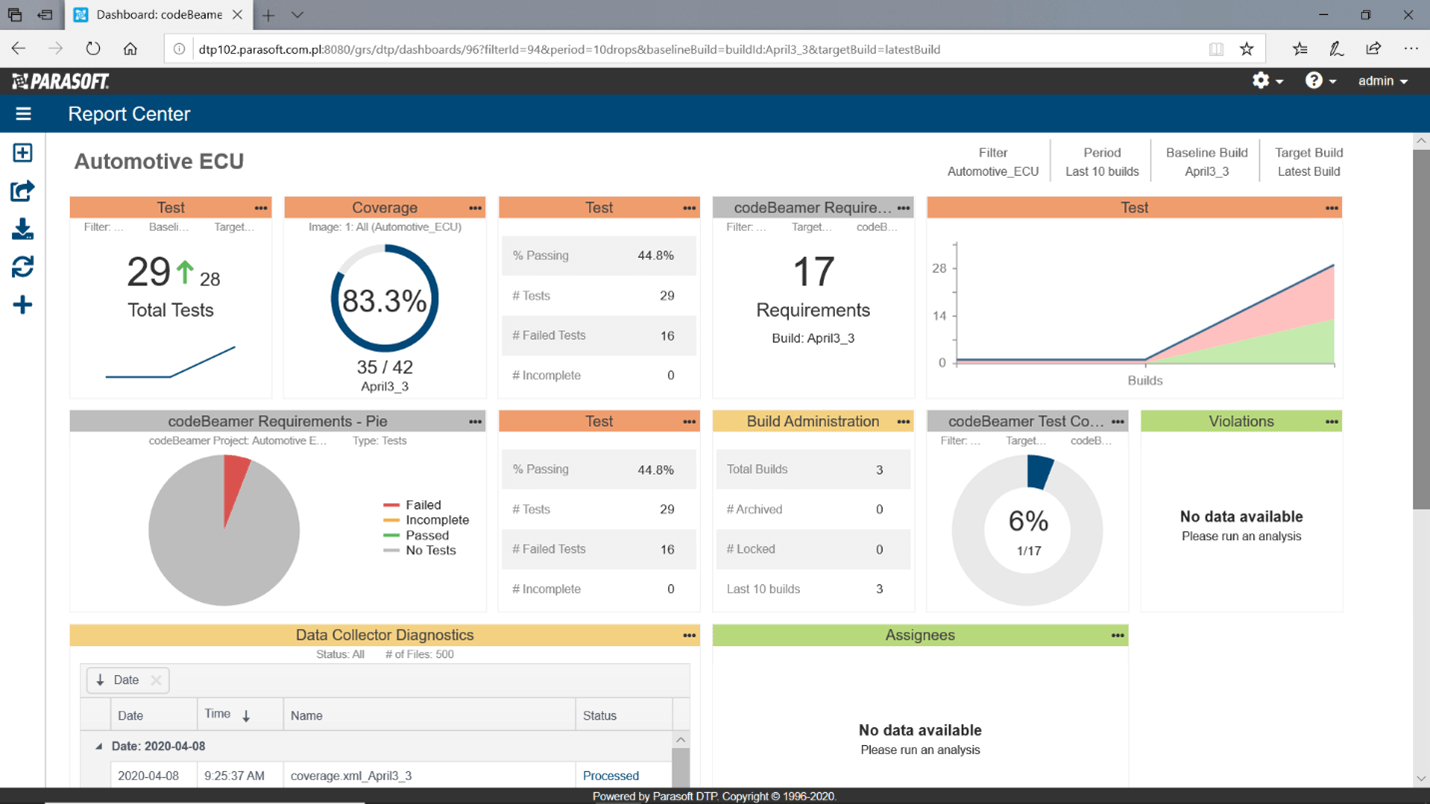

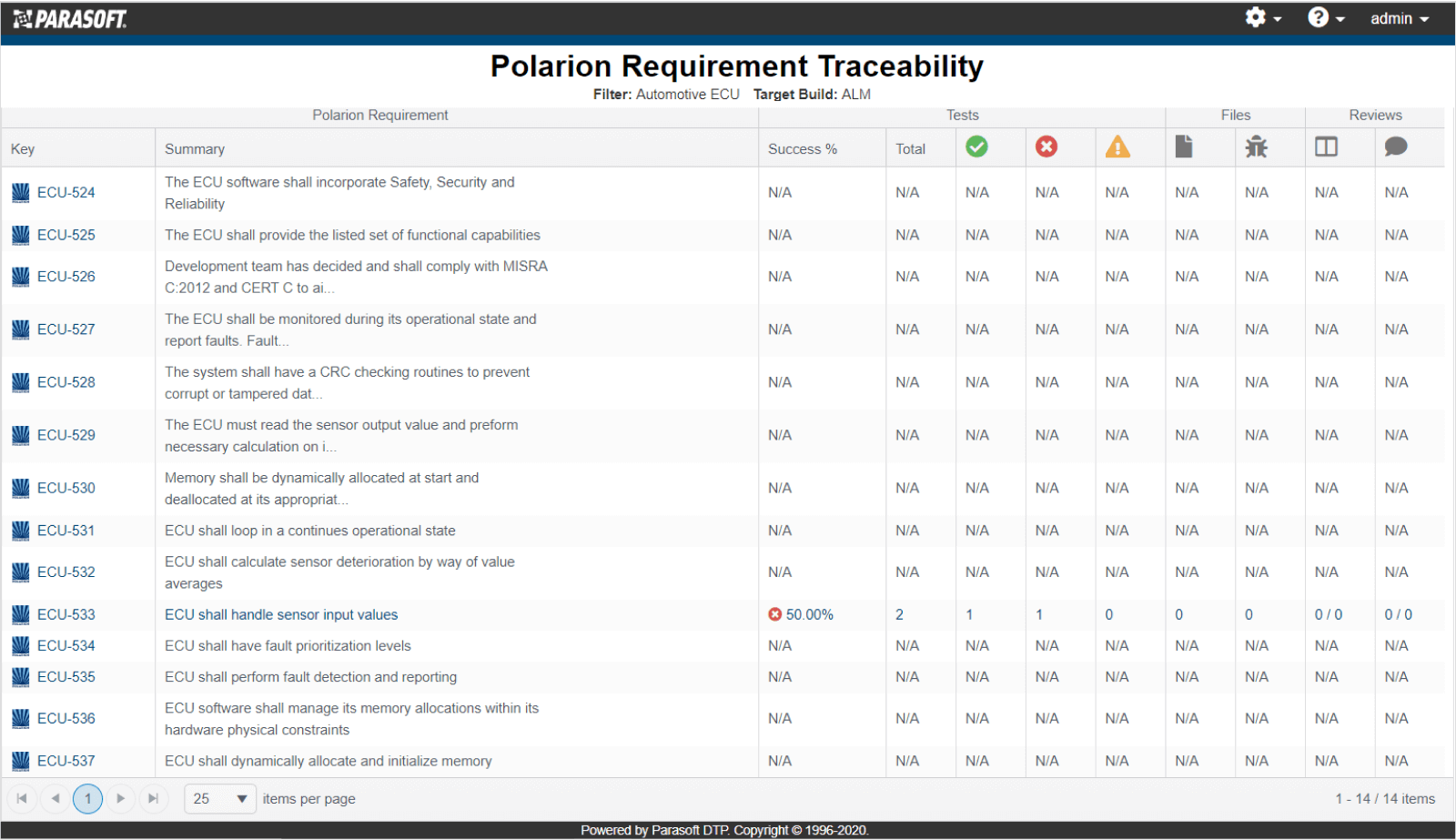

The traceability reporting in Parasoft DTP is highly customizable. The following image shows a requirements traceability matrix template for requirements authored in Polarion that trace to the test cases, static analysis findings, the source code files, and the manual code reviews.

Code Coverage

Code coverage expresses the degree to which the application’s source code is exercised by all testing practices, including unit, integration, and system testing — both automated and manual.

Collecting coverage data throughout the life cycle enables more accurate quality and coverage metrics, while exposing untested or undertested parts of the application. Depending on the safety integrity level (ASIL in ISO 26262), the depth and completeness of the code coverage will vary.

Application coverage can also help organizations focus testing efforts when time constraints limit their ability to run the full suite of manual regression tests. Capturing coverage data from the running system on its target hardware during integration and system testing complements the code coverage collected during unit testing.
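One way coverage data can focus a limited regression run is to select only the tests that exercise changed code. The sketch below assumes a hypothetical per-test coverage map and a list of changed files. The file and test names are invented, and this is an illustration of the idea rather than a description of any particular tool.

```python
# Hypothetical coverage map: which source files each test exercised.
coverage_by_test = {
    "manual_door_lock_check": {"door.c", "can_bus.c"},
    "manual_wiper_check": {"wiper.c"},
    "system_startup_check": {"boot.c", "can_bus.c"},
}


def select_tests(coverage_by_test, changed_files):
    """Return the tests whose recorded coverage touches any changed file."""
    changed = set(changed_files)
    return sorted(
        test for test, files in coverage_by_test.items() if files & changed
    )


print(select_tests(coverage_by_test, ["can_bus.c"]))
# ['manual_door_lock_check', 'system_startup_check']
```

The selection is only as good as the recorded coverage, so tests without coverage data would still need to be scheduled by other means.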

Benefits of Aggregate Code Coverage

Captured coverage data is leveraged as part of the continuous integration (CI) process, as well as part of the tester’s workflow. Parasoft DTP performs advanced analytics on code coverage from all tests, source code changes, static analysis results, and test results. The results help identify untested and undertested code and other high-risk areas in the software.

Analyzing code, executing tests, tracking coverage, and reporting the data in a dashboard or chart is a useful first step toward assessing risk, but teams must still dedicate significant time and resources to reading the tea leaves, hoping that they’ve interpreted the data correctly.

Understanding the potential risks in the application requires advanced analytics processes that merge and correlate the data. This provides greater visibility into the true code coverage and helps identify testing gaps and overlapping tests. For example, what is the true coverage for the application under test when your tools report different coverage values for unit tests, automated functional tests, and manual tests?

The percentages cannot simply be added together because the tests overlap. This is a critical step for understanding the level of risk associated with the application under development.
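A small worked example shows why. Assume a hypothetical 100-line application and three invented sets of covered line numbers, one per testing practice. The true aggregate coverage is the size of the union of the covered lines, not the sum of the individual percentages.

```python
# Hypothetical application with 100 executable lines, numbered 1..100.
all_lines = set(range(1, 101))

# Invented coverage data: line numbers exercised by each testing practice.
unit_tests = set(range(1, 61))         # lines 1-60  -> 60%
functional_tests = set(range(41, 91))  # lines 41-90 -> 50%
manual_tests = set(range(81, 96))      # lines 81-95 -> 15%


def percent(covered, total):
    """Coverage of `covered` as a percentage of the lines in `total`."""
    return 100.0 * len(covered & total) / len(total)


runs = [unit_tests, functional_tests, manual_tests]
naive_sum = sum(percent(run, all_lines) for run in runs)
merged = set().union(*runs)

print(naive_sum)                    # 125.0 (impossible as a coverage value)
print(percent(merged, all_lines))   # 95.0  (true aggregate coverage)
print(sorted(all_lines - merged))   # [96, 97, 98, 99, 100] (untested lines)
```

Merging the line-level data rather than the percentages also yields the list of lines that no test reached, which is where the remaining risk sits.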

Standards compliance automation reduces overhead and complexity by automating the most repetitive and tedious processes. The tools can keep track of the project history and relate results to requirements, software components, tests, and recorded deviations.
