Software verification is an inherent part of safety-critical software development. Testing by execution is a key way to demonstrate that requirements are implemented and that the delivered software meets its quality goals. Unit testing is the verification of low-level requirements. It ensures that each software unit does what it’s required to do within its expected quality of service requirements: safety, security, and reliability. Safety and security requirements dictate that software units don’t behave in unforeseen ways and that the system is not susceptible to hijacking, data manipulation, theft, or corruption.

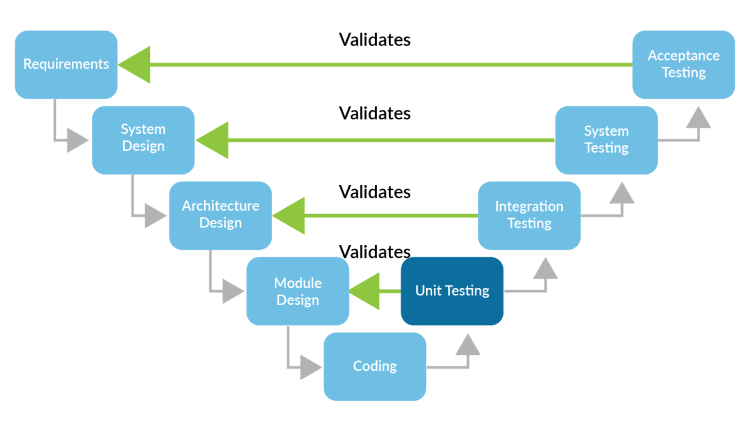

The V-model in software development showing the relationship between each phase and the validation inferred at each stage of testing.

In terms of the classic V-model process of development, unit test execution is a verification practice to ensure the module is designed correctly. DO-178C does not specifically mandate unit testing by name, but rather uses the terms high- and low-level requirements-based testing.

Low-level testing is commonly understood to be unit testing. In particular, the requirements for this type of requirements-based testing include the following.

DO-178C does not prescribe specific testing methodologies or tools but does emphasize the need for thorough testing to ensure the safety, security, and reliability of airborne software. Tests must be performed at all levels of the system, with traceability between requirements, design, source code, and tests. In addition, test plans, test cases, and results must be documented for certification.

These tests directly test functionality and quality of service as specified in each requirement. Test automation tools need to support bidirectional traceability of requirements to their tests and the requirements testing coverage reports to show compliance.

High-level requirements are derived from top-level system requirements. They decompose a system requirement into various high-level functional and nonfunctional requirements. This phase of the requirements decomposition helps in the architectural design of the system under development.

High-level requirements clarify and help define expected behavior as well as safety tolerances, security expectations, reliability, performance, portability, availability, scalability, and more. Each high-level requirement links up to the system requirement that it satisfies. In addition, high-level test cases are created and linked to each high-level requirement for the purpose of its verification and validation. This software requirements analysis process continues as each high-level requirement is further decomposed into low-level requirements.

Low-level requirements are software requirements derived from high-level requirements. They further decompose and refine the specification of the software’s behavior and quality of service.

These requirements drill down to another level of abstraction. They map to individual software units and are written in a way that facilitates software detail design and implementation. Traceability is established from each low-level requirement up to its high-level requirement and down to the low-level tests or unit test cases that verify and validate it.

Unit testing becomes about isolating the function, method, or procedure. It’s done by stubbing and mocking out dependencies and forcing specific paths of execution. Stubs take the place of the code in the unit that’s dependent on code outside of the unit. They also provide the developer or tester with the ability to manipulate the response or result so that the unit can be exercised in various ways and for various purposes, for example, to ensure that the unit performs reliably, is safe, and is also free from security vulnerabilities.
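A minimal sketch of isolating a unit with a stub follows. It assumes a hypothetical unit, `check_overheat()`, that depends on a hypothetical sensor read function; the names, the 90 °C limit, and the function-pointer seam are illustrative assumptions, not the stub mechanism of any particular tool.

```c
#include <assert.h>
#include <stdbool.h>

/* Hypothetical dependency: on target this would call a sensor driver. */
typedef int (*temp_reader_fn)(void);

/* Hypothetical limit, chosen only for illustration. */
#define OVERHEAT_LIMIT_C 90

/* Unit under test: reports overheat at or above the limit. */
bool check_overheat(temp_reader_fn read_temperature_c)
{
    return read_temperature_c() >= OVERHEAT_LIMIT_C;
}

/* Stub: replaces the driver and lets the test choose the returned value. */
static int stub_temperature_c = 0;

static int stub_read_temperature_c(void)
{
    return stub_temperature_c;
}

/* Test helper: forces a specific path through the unit via the stub. */
bool overheat_with_stubbed_reading(int reading_c)
{
    stub_temperature_c = reading_c;
    return check_overheat(stub_read_temperature_c);
}
```

Because the test controls the stub's return value, both branches of the unit can be exercised on a host machine without any sensor hardware.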

Interface tests ensure programming interfaces behave and perform as specified. Test tools need to create function stubs and data sources to emulate behavior of external components for automatic unit test execution.

Fault injection tests use unexpected inputs and introduce failures in the execution of code to examine failure handling or lack thereof. Test automation tools must support injection of fault conditions using function stubs and automatic unit test generation using a diverse set of preconditions, such as min, mid, max, and heuristic value testing.
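As a rough illustration of fault injection through stubs combined with min, mid, and max preconditions, consider the sketch below. The unit `send_throttle()`, the bus write seam, and the status codes are hypothetical names invented for this example.

```c
#include <assert.h>
#include <limits.h>
#include <stddef.h>

/* Hypothetical dependency seam: writes one value to a bus, 0 on success. */
typedef int (*bus_write_fn)(int value);

enum send_status { SEND_OK = 0, SEND_BUS_ERROR = 1 };

/* Unit under test: clamps the command to [0, 100] and sends it. */
enum send_status send_throttle(int percent, bus_write_fn bus_write, int *sent)
{
    int clamped = percent;
    if (clamped < 0)   { clamped = 0; }
    if (clamped > 100) { clamped = 100; }
    if (bus_write(clamped) != 0) {
        return SEND_BUS_ERROR;   /* failure path under test */
    }
    *sent = clamped;
    return SEND_OK;
}

/* Stubs: one healthy bus, one that always injects a fault. */
static int stub_bus_ok(int value)     { (void)value; return 0; }
static int stub_bus_failed(int value) { (void)value; return -1; }

/* Runs min, mid, and max inputs against both stubs; returns failure count. */
int run_fault_injection_tests(void)
{
    const int inputs[]   = { INT_MIN, 0, INT_MAX };
    const int expected[] = { 0, 0, 100 };
    int failures = 0;

    for (size_t i = 0; i < sizeof inputs / sizeof inputs[0]; ++i) {
        int sent = -1;
        if (send_throttle(inputs[i], stub_bus_ok, &sent) != SEND_OK ||
            sent != expected[i]) {
            ++failures;
        }
        sent = -1;
        /* Injected fault: the unit must report it and leave 'sent' alone. */
        if (send_throttle(inputs[i], stub_bus_failed, &sent) != SEND_BUS_ERROR ||
            sent != -1) {
            ++failures;
        }
    }
    return failures;
}
```

The failing stub reaches an error-handling branch that would be hard to trigger reliably with real hardware.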

Resource usage tests evaluate the amount of memory, file space, CPU execution time, or other target hardware resources used by the application.
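One way to check a memory budget at the unit level is to route allocation through a counting stub. The sketch below assumes a hypothetical unit, `build_frame()`, that allocates through an injected allocator; the 4-byte header and all names are illustrative assumptions, and many safety-critical projects avoid dynamic allocation altogether.

```c
#include <assert.h>
#include <stddef.h>
#include <stdlib.h>

/* Seam with the same shape as malloc(). */
typedef void *(*alloc_fn)(size_t size);

/* Hypothetical unit: builds a frame of payload_len bytes plus a 4-byte header. */
unsigned char *build_frame(size_t payload_len, alloc_fn alloc)
{
    unsigned char *frame = alloc(payload_len + 4u);
    if (frame != NULL) {
        frame[0] = 0x7Eu;   /* illustrative start-of-frame marker */
    }
    return frame;
}

/* Counting stub: records every byte the unit requests, then defers to malloc. */
static size_t bytes_requested;

static void *counting_alloc(size_t size)
{
    bytes_requested += size;
    return malloc(size);
}

/* Returns the number of heap bytes the unit requested for a given payload. */
size_t measure_frame_allocation(size_t payload_len)
{
    bytes_requested = 0;
    unsigned char *frame = build_frame(payload_len, counting_alloc);
    free(frame);
    return bytes_requested;
}
```

A test can then compare the measured value against the budget stated in the requirement, for example asserting that a 60-byte payload requests no more than 128 bytes.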

Clearly, every requirement drives, at minimum, a single unit test case. Although test automation tools don’t generate tests directly from requirements, they must support two-way traceability from requirements to code and requirements to tests, and maintain requirements, tests, and code coverage information.
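Two-way traceability can be pictured as a table of links that is queried in both directions. The sketch below is a toy model with invented requirement and test IDs; it is not how any specific tool stores trace data.

```c
#include <assert.h>
#include <stddef.h>
#include <string.h>

/* One trace link: a test case verifies a low-level requirement. */
struct trace_link {
    const char *requirement_id;
    const char *test_id;
};

/* Hypothetical project data; all IDs are invented for illustration. */
static const char *const requirements[] = { "LLR-001", "LLR-002", "LLR-003" };

static const struct trace_link links[] = {
    { "LLR-001", "test_overheat_at_limit" },
    { "LLR-001", "test_overheat_below_limit" },
    { "LLR-002", "test_throttle_clamped" },
};

#define COUNT_OF(a) (sizeof(a) / sizeof((a)[0]))

/* Requirement to tests: how many tests verify a given requirement? */
size_t count_tests_for_requirement(const char *requirement_id)
{
    size_t count = 0;
    for (size_t i = 0; i < COUNT_OF(links); ++i) {
        if (strcmp(links[i].requirement_id, requirement_id) == 0) {
            ++count;
        }
    }
    return count;
}

/* Test to requirement: which requirement does a test trace to? NULL if none. */
const char *requirement_for_test(const char *test_id)
{
    for (size_t i = 0; i < COUNT_OF(links); ++i) {
        if (strcmp(links[i].test_id, test_id) == 0) {
            return links[i].requirement_id;
        }
    }
    return NULL;
}

/* Coverage gap: number of requirements with no test at all. */
size_t count_untested_requirements(void)
{
    size_t untested = 0;
    for (size_t i = 0; i < COUNT_OF(requirements); ++i) {
        if (count_tests_for_requirement(requirements[i]) == 0) {
            ++untested;
        }
    }
    return untested;
}
```

In this data set `LLR-003` has no linked test, which is exactly the kind of gap a requirements coverage report is meant to expose.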

Test cases must ensure that units behave in the same manner for a range of inputs, not just cherry-picked inputs for each unit. Test automation tools must support test case generation using data sources to efficiently use a wide range of input values. Parasoft C/C++test uses factory functions to prepare sets of input parameter values for automated unit test generation.

Automatically generated test cases, like heuristic values and boundary values, employ data sources to use a wide range of input values in tests.
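The idea of driving one test from a data source can be sketched with a plain table of inputs and expected results. The unit `saturating_add_u8()` and the table below are hypothetical examples written by hand, not output from an automated generator.

```c
#include <assert.h>
#include <stddef.h>
#include <stdint.h>

/* Unit under test: 8-bit addition that saturates at 255 instead of wrapping. */
uint8_t saturating_add_u8(uint8_t a, uint8_t b)
{
    unsigned int sum = (unsigned int)a + (unsigned int)b;
    return (sum > UINT8_MAX) ? (uint8_t)UINT8_MAX : (uint8_t)sum;
}

/* One row of the data source: inputs plus the expected result. */
struct add_case {
    uint8_t a;
    uint8_t b;
    uint8_t expected;
};

static const struct add_case data_source[] = {
    {   0,   0,   0 },  /* minimum values */
    { 100,  27, 127 },  /* typical mid-range values */
    { 127, 128, 255 },  /* sum lands exactly on the maximum */
    { 128, 128, 255 },  /* smallest equal-operand sum that saturates */
    { 255, 255, 255 },  /* maximum values */
};

/* Runs the same check for every row; returns the number of failing rows. */
int run_data_driven_tests(void)
{
    int failures = 0;
    for (size_t i = 0; i < sizeof data_source / sizeof data_source[0]; ++i) {
        const struct add_case *c = &data_source[i];
        if (saturating_add_u8(c->a, c->b) != c->expected) {
            ++failures;
        }
    }
    return failures;
}
```

Adding a new boundary or heuristic value means adding a row, not writing another test function.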

The error guessing method uses the function stubs mechanism to inject fault conditions into the tested code. Flow analysis results can also be used to write additional tests.

Test automation provides large benefits to safety-critical embedded device software. Moving away from test suites that require a lot of manual intervention means that testing can be done more quickly, more easily, and more often.

Offloading this manual testing effort frees up time for better test coverage and other safety and quality objectives. An important requirement for automated test suite execution is being able to run these tests on both host and target environments.

Automating testing of embedded software is more challenging due to the complexity of initiating and observing tests on embedded targets, not to mention the limited access to target hardware that software teams have.

DO-178C requires testing software in a representative environment that reflects the actual deployment conditions. This includes testing on the target hardware or using a software environment that closely resembles the final target environment. This approach is required to ensure that the software operates correctly and reliably in the actual aircraft or airborne system.

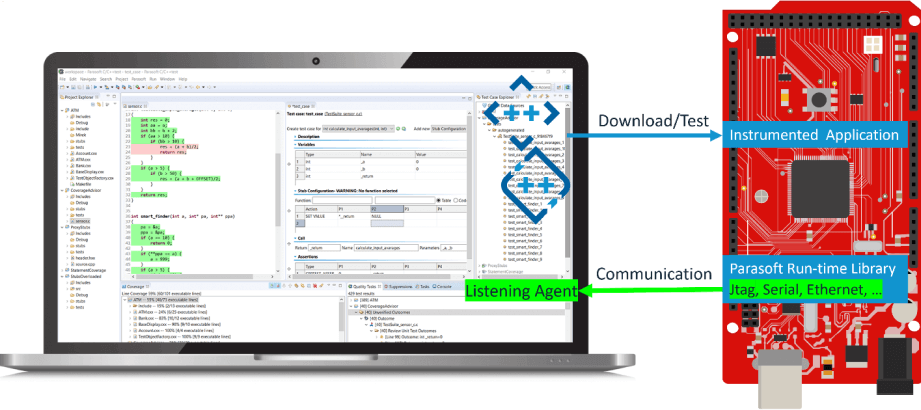

Software test automation is essential to make embedded testing workable on a continuous basis from host development system to target system. Testing embedded software is particularly time consuming. Automating the regression test suite provides considerable time and cost savings. In addition, C/C++test CT and C/C++test perform code coverage data collection from the target system, which is essential for validation and standards compliance.

Traceability between test cases, test results, source code, and requirements must be recorded and maintained. For those reasons, data collection is critical in test execution.

Parasoft C/C++test ships with a test harness optimized to add minimal overhead to the binary footprint. The harness is provided as source code, so it can be customized if platform-specific modifications are required.

A high-level view of deploying, executing, and observing tests from host to target in Parasoft C/C++ testing solutions.

One huge benefit that the Parasoft C/C++test solution offers is its dedicated integrations with embedded IDEs and debuggers that make the process of executing test cases smooth and automated. Supported IDE environments include:

- VS Code
- Eclipse
- Green Hills Multi
- Wind River Workbench
- IAR EW
- ARM MDK
- ARM DS-5
- TI CCS
- Visual Studio
- Many more

Unit test automation tools universally support some sort of test framework, which provides the harness infrastructure to execute units in isolation while satisfying dependencies via stubs. Parasoft C/C++test is no exception. Part of its unit test capability is the automated generation of test harnesses and the executable components needed for host and target-based testing.

Test data generation and management is by far the biggest challenge in unit testing. Test cases are particularly important in safety-critical software development because they must verify functional requirements and test for unpredictable behavior, security, and safety requirements, all while satisfying test coverage criteria.

Parasoft C/C++test automatically generates test cases in a format similar to the popular CppUnit format. By default, C/C++test generates one test suite per source/header file. It can also be configured to generate one test suite per function or one test suite per source file.

Safe stub definitions are automatically generated to replace "dangerous" functions, which include system I/O routines such as rmdir(), remove(), rename(), and so on. In addition, stubs can be automatically generated for missing function and variable definitions. User-defined stubs can be added as needed.
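To illustrate what a safe stub for a routine like remove() might do, the hand-written sketch below records the request instead of touching the file system. The unit `cleanup_log()`, the function-pointer seam, and the stub's bookkeeping variables are assumptions made for this example and do not represent the stubs a tool generates.

```c
#include <assert.h>
#include <stdio.h>
#include <string.h>

/* Seam with the same signature as remove() from <stdio.h>. */
typedef int (*remove_fn)(const char *path);

/* Unit under test: deletes a log file; returns 0 on success, -1 otherwise. */
int cleanup_log(const char *path, remove_fn do_remove)
{
    if (path == NULL || path[0] == '\0') {
        return -1;
    }
    return (do_remove(path) == 0) ? 0 : -1;
}

/* Safe stub state: what was requested, how often, and what to report back. */
static char stub_last_path[64];
static int  stub_call_count;
static int  stub_result;

/* Safe stub: records the request and never deletes anything. */
static int safe_remove_stub(const char *path)
{
    ++stub_call_count;
    strncpy(stub_last_path, path, sizeof stub_last_path - 1);
    stub_last_path[sizeof stub_last_path - 1] = '\0';
    return stub_result;
}

/* Exercises success, failure, and invalid input; returns failure count. */
int run_safe_stub_tests(void)
{
    int failures = 0;

    stub_call_count = 0;
    stub_result = 0;                       /* stubbed deletion succeeds */
    if (cleanup_log("/tmp/app.log", safe_remove_stub) != 0 ||
        stub_call_count != 1 ||
        strcmp(stub_last_path, "/tmp/app.log") != 0) {
        ++failures;
    }

    stub_result = -1;                      /* stubbed deletion fails */
    if (cleanup_log("/tmp/app.log", safe_remove_stub) != -1) {
        ++failures;
    }

    /* Invalid input must be rejected before the stub is ever called. */
    if (cleanup_log("", safe_remove_stub) != -1 || stub_call_count != 2) {
        ++failures;
    }
    return failures;
}
```

In a production build the caller would pass the real `remove` from `<stdio.h>`; in the test build the stub keeps the host file system untouched while still letting the test verify the requested path and the error handling.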