See what API testing solution came out on top in the GigaOm Radar Report. Get your free analyst report >>

An essential start to the journey of delivering high-quality, real-time embedded software systems is incorporating static analysis into the development workflow. With static analysis, software engineers can enhance the reliability, performance, and security of their software. At the same time, they can reduce the cost and time associated with identifying and fixing defects later in the development cycle.

Static analysis identifies errors in the code at an early stage in the development process, long before the software runs on the target hardware. This includes detecting syntax errors, logic errors, and potential runtime errors. Security is also paramount in embedded systems, especially those that are part of critical infrastructure or Internet of Things (IoT) devices. Static analysis can uncover vulnerabilities such as buffer overflows, input validation flaws, and other security weaknesses that could be exploited by attackers.

In addition, most development teams will agree that unit testing is also essential to embedded software development despite the effort and costs. Unit testing helps developers truly understand the code they’re developing and provides a solid foundation to a verification and validation regimen needed to satisfy safety and security goals for a product. Building on this foundation of unit tests enables teams to accelerate agile development while mitigating risk of defects slipping into later stages of the pipeline.

Automating static analysis into the software development workflow provides significant benefits.

This ensures that issues are detected and addressed immediately, maintaining code quality throughout the development process, which is essential for large teams or projects with rapid development cycles. It will enforce coding standards across the entire code base, eliminating human error and bias in code reviews. This proactive approach will also ensure security by identifying potential vulnerabilities to be mitigated before they can be exploited.

While static analysis provides valuable insights into code quality and potential issues without execution, unit testing verifies the actual behavior and correctness of the code in execution. Using both techniques together ensures a more robust and reliable software development process, as they complement each other and cover a wider range of potential problems.

However, despite the benefits, development teams often struggle with performing sufficient unit testing. The constraints on the amount of testing are due to multiple factors such as the pressure to rapidly deliver increased functionality and the complexity and time-consuming nature of creating valuable unit tests.

Common reasons developers cite that limit the efficiency of unit testing as a core development practice include the following.

Unit test automation tools universally support some sort of test framework, which provides the harness infrastructure to execute units in isolation while satisfying dependencies via stubs. This includes the automated generation of test harnesses and the executable components needed for host and target-based testing.

Test data generation and management, however, is the biggest challenge in unit testing and test generation. Test cases need to cover a gamut of validation roles such as ensuring functional requirements, detecting unpredictable behavior, and assuring security and safety requirements, all while satisfying test coverage criteria.

Automated test generation decreases the inefficiencies of unit testing by removing the difficulties with initialization, isolation, and managing dependencies. It also removes much of the manual coding required while helping to manage the test data needed to drive verification and validation.

Static code analysis provides a variety of metrics that help assess different aspects of embedded code safety, security, reliability, performance, and maintainability. They each offer valuable information about various aspects of the code’s health. One of these metrics is cyclomatic complexity, which measures the number of linearly independent paths through a program’s source code.
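As a rough illustration of the metric, cyclomatic complexity is commonly computed from a control flow graph as M = E - N + 2P, where E is the number of edges, N the number of nodes, and P the number of connected components. The sketch below assumes the graph has already been extracted and is given as a list of edges. The function name and the example graph are hypothetical, and real analyzers derive the graph from parsed source code.

```python
def cyclomatic_complexity(edges, components=1):
    """Return M = E - N + 2P for a control flow graph given as (src, dst) edges."""
    nodes = {node for edge in edges for node in edge}
    return len(edges) - len(nodes) + 2 * components


# Hypothetical graph for: if (x > 0) { a(); } else { b(); } while (y) { c(); }
EXAMPLE_CFG = [
    ("entry", "if"),
    ("if", "a"),
    ("if", "b"),
    ("a", "while"),
    ("b", "while"),
    ("while", "c"),
    ("c", "while"),
    ("while", "exit"),
]
```

For the example graph, there are 8 edges and 7 nodes, so the function returns 3: one path plus one for each of the two decision points.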

Higher complexity indicates a higher potential for bugs and code that is harder to read, maintain, and test. Other values that static analysis can provide, which aren’t quality measures by themselves but give a sense of maintainability, include:

Interesting metrics that static analysis can provide, but that aren’t generally applied, include code coverage and performance metrics. Though code coverage is commonly captured by unit tests, static analysis can provide this metric and even find dead code. The performance metric is measured by identifying code that may lead to performance issues, such as inefficient loops or recursive calls. There are many other metrics around resource usage, duplication, null pointer dereferences, and division by zero.
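To make the idea of finding dead code without execution concrete, here is a minimal sketch. It uses Python's standard `ast` module on Python source purely as a stand-in, since commercial analyzers for C and C++ rely on much deeper control and data flow analysis. The function name `find_unreachable` is an assumption made for this example. It only flags statements that directly follow a `return`, `raise`, `break`, or `continue` in the same block.

```python
import ast

# Statements after which control never falls through to the next statement.
TERMINATORS = (ast.Return, ast.Raise, ast.Break, ast.Continue)


def find_unreachable(source):
    """Return line numbers of the first unreachable statement in each block."""
    tree = ast.parse(source)
    unreachable = []
    for node in ast.walk(tree):
        for field in ("body", "orelse", "finalbody"):
            block = getattr(node, field, None)
            if not isinstance(block, list):
                continue  # e.g. lambda or conditional-expression bodies
            for index, stmt in enumerate(block[:-1]):
                if isinstance(stmt, TERMINATORS):
                    unreachable.append(block[index + 1].lineno)
                    break
    return sorted(unreachable)
```

The source is parsed but never run, which is the defining property of static analysis described above.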

By taking advantage of these provided metrics, static code analysis helps developers identify potential issues early in the development cycle, improve code quality and maintainability, and ensure adherence to coding standards and best practices.

Software verification and validation is an inherent part of embedded software development, and testing is a key way to demonstrate correct software behavior. Unit testing is the verification of module design. It ensures that each software unit does what it’s required to do.

In addition, safety and security requirements may require that software units don’t behave in unexpected ways and are not susceptible to manipulation with unexpected data inputs.

In terms of the classic V model of development, unit test execution is a verification practice to ensure module design is correct. Many safety-specific development standards have guidelines for what needs to be tested at the unit level. For example, IEC 61508 and related standards have specific guidelines for testing in accordance with the safety integrity level (SIL), where requirements-based testing and interface testing are highly recommended for all levels. Fault injection and resource usage tests are recommended at lower integrity levels and highly recommended at the highest SILs. Similarly, the method of deriving test cases is also specified with recommended practices.

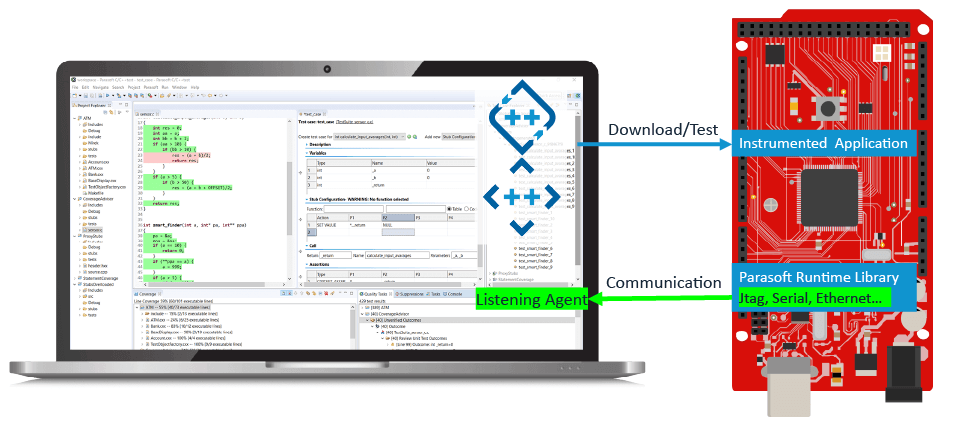

Test automation provides large benefits to embedded software. Moving away from test suites that require a lot of manual intervention means that testing can be done quicker, easier, and more often. Offloading this manual testing effort frees up time for better test coverage and other safety and quality objectives. An important requirement for automated test suite execution is being able to run these tests on both host and target environments.

Automating testing for embedded software is more challenging due to the complexity of initiating and observing tests on target hardware, not to mention the limited access to target hardware that software teams have.

Software test automation is essential to make embedded testing workable on a continuous basis from host development system to target system. Testing embedded software is particularly time consuming. Automating the regression test suite provides considerable time and cost savings. In addition, test results and code coverage data collection from the target system are essential for validation and standards compliance.

Traceability between test cases, test results, source code, and requirements must be recorded and maintained. So, data collection is critical in test execution.

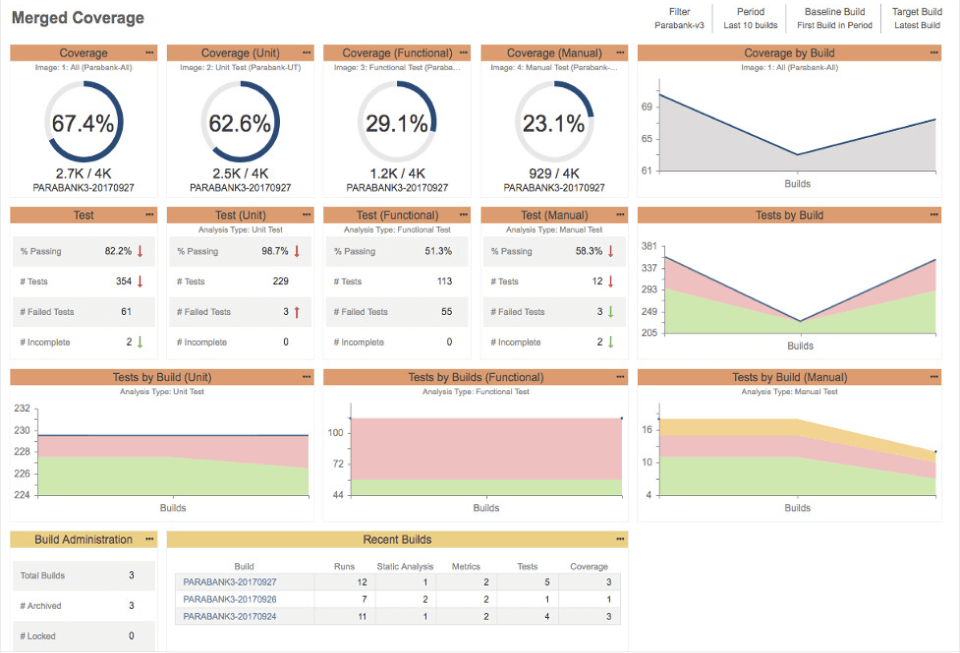

Collecting and analyzing code coverage metrics is an important aspect of safety-critical software development. Code coverage measures the completion of test cases and executed tests. It provides evidence that validation is complete, at least as specified by the software design. It also identifies dead code, which is code that can logically never be reached, and helps demonstrate the absence of unintended behavior. Code that isn’t covered by any test is a liability because its behavior and functionality are unknown.

The amount and extent of code coverage depends on the safety integrity level. The higher the integrity level, the higher the rigor used, and inevitably the number and complexity of test cases. Regardless of the level of coverage required, automated test case generation can increase test coverage over time.

Advanced unit test automation tools should measure these code coverage metrics. In addition, it’s necessary that this data collection works on host and target testing and accumulates test coverage history over time. This code coverage history can span unit, integration, and system testing to ensure coverage is complete and traceable at all levels of testing.
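A minimal sketch of accumulating coverage across test levels follows. It assumes each run reports covered line numbers per file as a dictionary. The data layout, file names, and function names are hypothetical and not the format of any particular tool.

```python
def merge_coverage(*runs):
    """Union the covered line numbers per file across several test runs."""
    merged = {}
    for run in runs:
        for path, lines in run.items():
            merged.setdefault(path, set()).update(lines)
    return merged


def coverage_percent(covered, executable):
    """Percentage of executable lines that appear in the covered set."""
    if not executable:
        return 100.0
    return 100.0 * len(covered & executable) / len(executable)


# Hypothetical results from a host-based unit run and a target-based integration run.
HOST_UNIT_RUN = {"motor.c": {1, 2, 3}}
TARGET_INTEGRATION_RUN = {"motor.c": {3, 4}, "can.c": {10}}
```

Merging the two example runs yields lines 1 through 4 for `motor.c`. If that file has five executable lines, the accumulated statement coverage is 80 percent, higher than either run achieves alone.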

For practical purposes, automated tools should generate test cases in existing well-known formats like CppUnit. By default, one test suite per source/header file makes sense, but tools should support one test suite per function or one test suite per source file if needed.

Another important consideration is the automatic stub definitions to replace “dangerous” functions, which includes system I/O routines such as rmdir(), remove(), rename(), and so on. In addition, stubs can be automatically generated for missing function and variable definitions. User-defined stubs can be added as needed.
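The stubbing idea can be sketched in Python with the standard `unittest.mock` module, which replaces `os.remove` and `os.rmdir` so the unit under test never touches the real file system. This is only an analogue of what a C or C++ test tool does when it substitutes stubs for system routines. The function `purge_log` is a hypothetical unit invented for this example.

```python
import os
from unittest import mock


def purge_log(directory, filename):
    """Hypothetical unit under test: delete a log file, then its directory."""
    try:
        os.remove(os.path.join(directory, filename))
        os.rmdir(directory)
    except OSError:
        return False
    return True


def run_stubbed_checks():
    """Exercise purge_log with the dangerous calls replaced by stubs."""
    with mock.patch("os.remove") as remove_stub, mock.patch("os.rmdir") as rmdir_stub:
        succeeded = purge_log("logs", "trace.log")
        # Make the stub simulate a failure that is hard to provoke on real hardware.
        rmdir_stub.side_effect = OSError("directory busy")
        failed = purge_log("logs", "trace.log")
        return succeeded, failed, remove_stub.call_count
```

Besides protecting the test environment, the stub makes it easy to inject an error return and check the unit's failure path.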

Although test automation tools can’t derive requirements tests from documentation, they can help make the creation of test cases, stubs, and mocks easier and more efficient. In addition, automation greatly improves test case data management and tool support for parameterized tests also reduces manual effort.

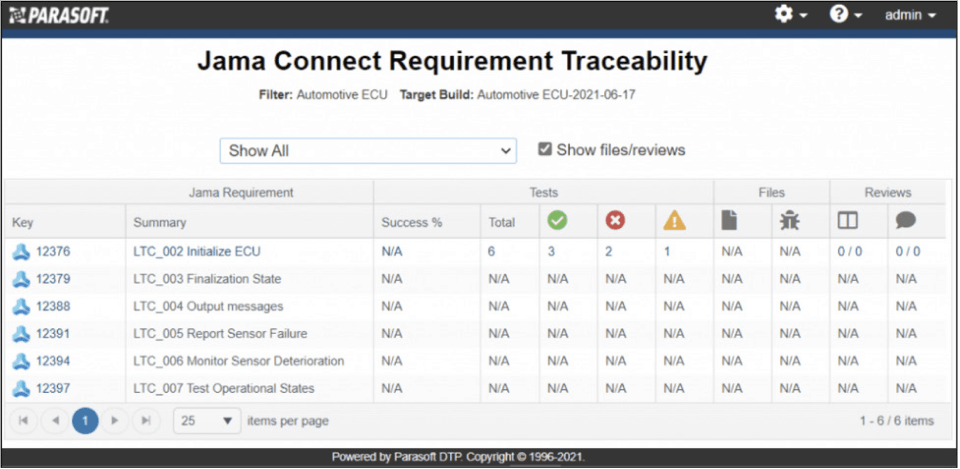

Particularly important is traceability from requirements to code to tests and test results. Manually managing traceability is nearly impossible, and automation makes two-way traceability a reality.

While requirements are being decomposed, traceability must be maintained throughout the phases of development as customer requirements decompose into system, high-level, and low-level requirements. The coding or implementation phase realizes the low-level requirements. Consider the typical V diagram of software.

Each phase drives the subsequent phase. In turn, the work items or refined requirements in each phase must satisfy the requirements from the previous phase. Architectural requirements that have been created or decomposed from system design must satisfy the system design/requirements, and so on.

Developers write code that implements or realizes each requirement, and for safety-critical applications, links for traceability to test cases and down to the code are established. Therefore, if a customer requirement changes or is removed, the team knows what it impacts down the line, all the way to the code and tests that validate the requirements.

Industry standards like DO-178B/C, ISO 26262, IEC 62304, IEC 61508, EN 50128, and others require the construction of a traceability matrix for identification of any gaps in the design and verification of requirements. This helps achieve the ultimate goal of building the right product. More than that, it helps ensure the product has the quality, safety, and security to remain the right product.
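As a simple illustration of how a traceability matrix exposes gaps, the sketch below assumes requirements, test-to-requirement links, and test results are available as plain Python collections. The identifiers, data layout, and function name are hypothetical. Real matrices are maintained in requirements management tools.

```python
def traceability_gaps(requirements, test_links, results):
    """
    requirements: iterable of requirement IDs
    test_links:   dict mapping test ID -> set of requirement IDs it verifies
    results:      dict mapping test ID -> "pass" or "fail" (missing = not run)
    """
    requirements = set(requirements)
    linked = set().union(*test_links.values())
    not_passing = set()
    for test_id, reqs in test_links.items():
        if results.get(test_id) != "pass":
            not_passing |= reqs
    return {
        # Requirements with no test linked at all.
        "untested": sorted(requirements - linked),
        # Requirements linked to at least one test that failed or did not run.
        "unverified": sorted(requirements & not_passing),
        # Tests that trace to no known requirement.
        "orphan_tests": sorted(
            t for t, reqs in test_links.items() if not reqs & requirements
        ),
    }


# Hypothetical project data.
REQUIREMENTS = ["REQ-1", "REQ-2", "REQ-3"]
TEST_LINKS = {"T1": {"REQ-1"}, "T2": {"REQ-2"}, "T3": {"REQ-9"}}
RESULTS = {"T1": "pass", "T2": "fail"}
```

With the example data, `REQ-3` is reported as untested, `REQ-2` as unverified because its only test failed, and `T3` as an orphan test.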

The creation of productive unit tests has always been a challenge. Functional safety standards compliance demands high-quality software, which drives a need for test suites that produce high code coverage statistics. Teams require unit test cases that help them achieve 100% code coverage. This is easier said than done. Analyzing branches in the code and trying to find reasons why certain code sections are not covered continues to steal cycles from development teams.

Unit test automation tools can be used to fill in the coverage gaps in test suites. For example, advanced static code analysis (data and control flow analysis) is used to find values for the input parameters required to execute specific lines of uncovered code.

It’s also valuable if you have automated tools that not only measure code coverage but also keep track of how much modified code is being covered by tests, because this can provide visibility into whether enough tests are being written along with changes in production code. See the following example code coverage report.

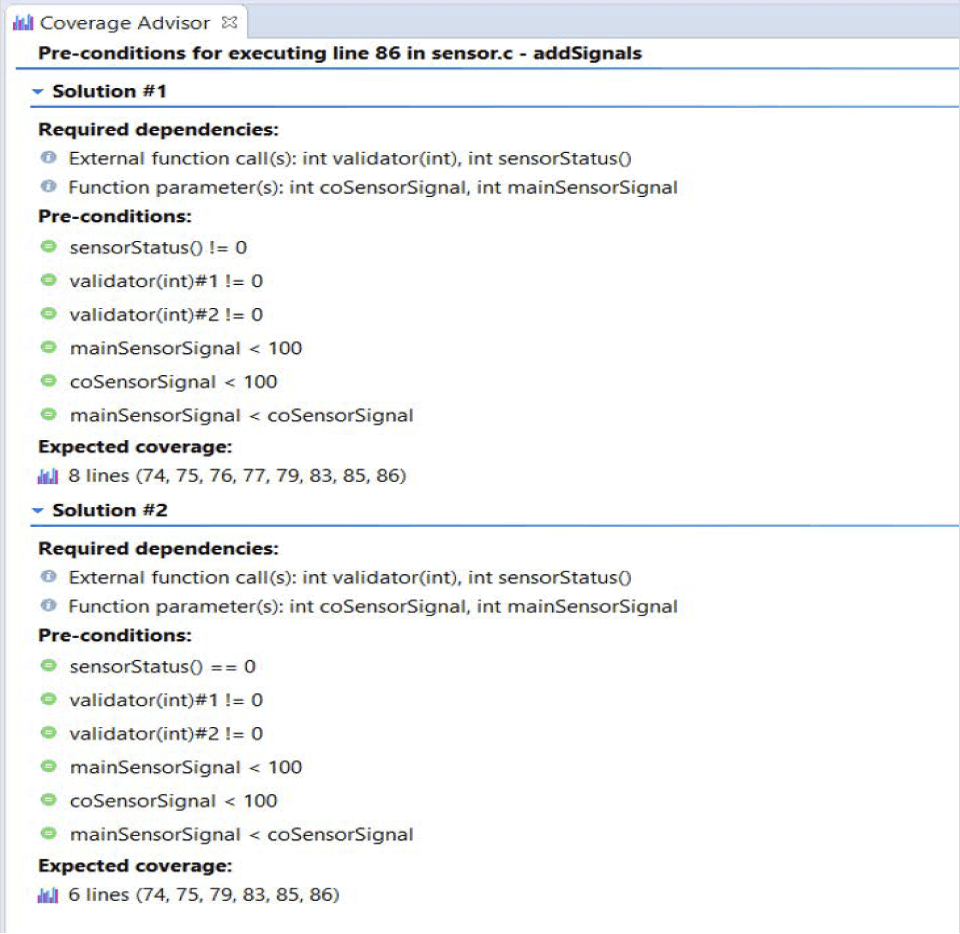
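The modified-code coverage figure can be approximated as the share of changed lines that were executed by tests. The sketch below assumes changed lines come from a diff between builds and covered lines from a coverage report, both as dictionaries keyed by file. The names and data are hypothetical.

```python
def modified_line_coverage(changed, covered):
    """
    changed: dict mapping file path -> set of changed line numbers
    covered: dict mapping file path -> set of line numbers executed by tests
    Returns (changed lines covered, total changed lines, percent covered).
    """
    total = sum(len(lines) for lines in changed.values())
    hit = sum(
        len(lines & covered.get(path, set())) for path, lines in changed.items()
    )
    percent = 100.0 * hit / total if total else 100.0
    return hit, total, percent


# Hypothetical data: five changed lines, three of which were executed by tests.
CHANGED = {"pid.c": {10, 11, 12, 13}, "adc.c": {5}}
COVERED = {"pid.c": {1, 2, 10, 11, 12}}
```

A low percentage here signals that production code is changing faster than tests are being written for it, even when overall coverage still looks healthy.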

In complex code, there are always those elusive code statements for which it’s exceedingly difficult to obtain coverage. It’s likely there are multiple input values with various permutations and possible paths that make it mind twisting and time consuming to decipher. But only one combination can get you the coverage you need. Combining test automation and static analysis makes it easy to obtain coverage of those difficult-to-reach lines of code. An example of test preconditions calculated with code analysis is shown in the Coverage Advisor.

Another class of tests is those created to induce an error condition in the unit under test. The input parameters in these cases are often out of bounds or right at the boundary conditions for data types, such as using the largest positive and most negative 32-bit integers for test data. Another example is fuzz testing, where these boundary conditions are mixed with random data designed to create an error condition or trigger a security vulnerability.
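A minimal sketch of boundary and fuzz data generation for 32-bit signed integers follows. The unit under test, `saturating_add_i32`, is a hypothetical function made up for this example, and the fixed seed is an assumption chosen so that runs are repeatable.

```python
import random

INT32_MIN, INT32_MAX = -2**31, 2**31 - 1


def boundary_values():
    """Values at and next to the limits of a 32-bit signed integer."""
    return [INT32_MIN, INT32_MIN + 1, -1, 0, 1, INT32_MAX - 1, INT32_MAX]


def fuzz_values(count, seed=0):
    """Boundary values mixed with seeded random values from the full range."""
    rng = random.Random(seed)
    return boundary_values() + [
        rng.randint(INT32_MIN, INT32_MAX) for _ in range(count)
    ]


def saturating_add_i32(a, b):
    """Hypothetical unit under test: add two int32 values, clamping on overflow."""
    return max(INT32_MIN, min(INT32_MAX, a + b))


def find_range_violations(values):
    """Return input pairs whose result leaves the int32 range (ideally none)."""
    return [
        (a, b)
        for a in values
        for b in values
        if not INT32_MIN <= saturating_add_i32(a, b) <= INT32_MAX
    ]
```

Running every pair of generated values through the unit is cheap to automate, which is why this class of test benefits so much from generated data.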

These test cases validate nonfunctional requirements since they fall outside the scope of product requirements, but are essential for determining performance, security, safety, reliability, and other product qualities. Automation is essential since these tests can be numerous (fuzz testing) and rely on repeated execution (performance testing) to help discover quality issues. Test case generation helps reduce the manual effort needed to create these test suites.

As part of most software development processes, regression testing is done after changes are made to software. These tests determine if the new changes had an impact on the existing operation of the software. Managing and executing regression tests is a large part of the effort and cost in testing. Even with automated test generation, executing tests, gathering results, and re-running tests are very time consuming. Regression testing encompasses test case maintenance, code coverage improvements, and traceability.

Regression tests are necessary, but they only indicate that recent code changes have not caused tests to fail. There’s no assurance that these changes will work. In addition, the nature of the changes that motivate the need to do regression testing can go beyond the current application and include changes in hardware, operating system, and operating environment.

In fact, all previously created test cases may need to be executed to ensure that no regressions exist and that a new dependable software version release is constructed. This is critical because each new software system or subsystem release becomes the base that later releases are built upon. If you don’t have a solid foundation, the whole thing can collapse.

To prevent this, it’s important to create regression testing baselines that are an organized collection of tests and will automatically verify all outcomes. These tests are run automatically on a regular basis to verify whether code modifications change or break the functionality captured in the regression tests. If any changes are introduced, these test cases will fail, alerting the team to the problem. During subsequent tests, Parasoft C++test will report tasks if it detects changes to the behavior captured in the initial test.
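The baseline idea can be sketched generically: record the outputs of a unit for a fixed set of inputs, then compare later versions against that record. This is not how Parasoft C++test is implemented. The function names and the two versions of the scaling routine are hypothetical.

```python
def record_baseline(unit, inputs):
    """Capture the current outputs of a unit for a fixed set of argument tuples."""
    return {args: unit(*args) for args in inputs}


def check_against_baseline(unit, baseline):
    """Return {args: (expected, actual)} for every input whose output changed."""
    differences = {}
    for args, expected in baseline.items():
        actual = unit(*args)
        if actual != expected:
            differences[args] = (expected, actual)
    return differences


def scale_v1(raw):
    """Hypothetical original unit: scale a 10-bit ADC reading to 0..5, truncating."""
    return raw * 5 // 1023


def scale_v2(raw):
    """Hypothetical modified unit: same scaling, but rounding instead of truncating."""
    return round(raw * 5 / 1023)


BASELINE = record_baseline(scale_v1, [(0,), (512,), (1023,)])
```

Checking `scale_v2` against the baseline reports a change for the input 512, where the result moves from 2 to 3, while the other inputs still match.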

The key challenge with regression testing is determining what parts of an application to test. It’s common to default to executing all regression tests when there’s doubt about what impact recent code changes have had, which is the all-or-nothing approach.

For large software projects, this becomes a huge undertaking and drags down the productivity of the team. This inability to focus testing hinders much of the benefits of iterative and continuous processes, potentially exacerbated in embedded software where test targets are a limited resource.

A couple of tasks are required here.

Test Impact Analysis (TIA) uses data collected during test runs and changes in code between builds to determine which files have changed and which specific tests touched those files. Parasoft’s analysis engine can analyze the delta between two builds and identify the subset of regression tests that need to be executed. It also understands the dependencies on the units modified to determine what ripple effect the changes have made on other units.
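The selection step can be sketched as follows, assuming a per-test record of the files each test touched and an optional map of which files depend on which. This is a simplified illustration with hypothetical names and data, not a description of Parasoft's analysis engine.

```python
def select_tests(test_coverage, changed_files, dependents=None):
    """
    test_coverage: dict mapping test name -> set of files it touched last run
    changed_files: set of files changed between the two builds
    dependents:    optional dict mapping file -> set of files that depend on it
    Returns the sorted subset of tests that should be re-run.
    """
    dependents = dependents or {}
    impacted = set(changed_files)
    queue = list(changed_files)
    # Follow the dependency map to capture the ripple effect of each change.
    while queue:
        current = queue.pop()
        for dependent in dependents.get(current, ()):
            if dependent not in impacted:
                impacted.add(dependent)
                queue.append(dependent)
    return sorted(
        test for test, files in test_coverage.items() if files & impacted
    )


# Hypothetical coverage data collected during earlier test runs.
TEST_COVERAGE = {
    "test_pid": {"pid.c"},
    "test_motor": {"motor.c"},
    "test_can": {"can.c"},
}
DEPENDENTS = {"pid.c": {"motor.c"}}
```

With a change to `pid.c` alone, only `test_pid` is selected from coverage data. Adding the dependency map also pulls in `test_motor`, while `test_can` is skipped.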

Due to the complexity of today’s codebases, every code change, however innocuous, can subtly impact application stability and ultimately “break the system.” These unintended consequences are impossible to discover through manual inspection, so testing is critical to mitigate the risk they represent. Unless it’s understood what needs to be retested, efficient testing practice can’t be achieved. If there is too much testing in each sprint or iteration, the efficiency brought by test automation is reduced. Testing too little is not an option.

TIA identifies what needs to be retested, which enables test planning based on a data-driven approach called change-based testing.

TIA needs a repository of tests that have already been executed against each build, either as part of a fully automated test process (such as a CI-driven build step) or while testing the new functionality. This analysis provides insight into where in the code the changes occurred, how the existing tests correlate to those changes, and where testing resources need to focus. Following is an example of a TIA.

From here, the regression test plan is augmented to address failed and incomplete test cases with the highest priority. The retest recommendations are then used to focus the scheduling of additional automated runs and to prioritize manual testing efforts.

Testing is a major bottleneck for embedded software development with too many defects being identified at the end of the release cycle due to not enough or misdirected testing. To yield the best results, focus testing efforts on the impact of the changes being made to unlock the efficiency that test automation delivers.

Met static analysis, unit testing, and code coverage requirements.

Tool qualification.

Testing is essential to embedded software development. It fosters true understanding of the code being developed and provides a solid foundation to a verification and validation regimen needed to satisfy safety and security goals for a product.

Static analysis is a crucial technique in software development that involves examining source code without executing it to identify potential issues and improve overall code quality. When teams use it from the get-go, static analysis:

Integrating static analysis into the development workflow leads to a more robust and maintainable codebase. To avoid being seen as a damper on productivity, it must be done in conjunction with unit testing.

The constraints on testing productivity are due to multiple factors such as the pressure and time it takes to deliver increased functionality, and the complexity and time-consuming nature of creating valuable tests.

Test data generation and management is by far the biggest challenge in unit testing and test generation. Test cases are particularly important in safety-critical software development because they must ensure functional requirements and test for unpredictable behavior, security, and safety requirements, all while satisfying test coverage criteria.

Automated test generation decreases the inefficiencies of unit testing by removing the difficulties with initialization and isolation and managing dependencies. It also removes much of the manual coding required while helping to manage the test data needed to drive verification and validation. This improves quality, safety, and security. It also reduces test time, costs, and time to market.