

Part of what separates the best software development companies from the rest is the depth of testing they carry out on their software products. But how do they do this? Learn in this high-level overview.


High-quality, safe, secure, and reliable software systems are delivered because developers, engineers, and programmers conduct rigorous software testing as part of a go-to-market strategy.

The benefits of testing are simple: flush out defects, prevent bugs, reduce development costs, improve performance, and prevent litigation. As developers of software testing tools, we fundamentally believe testing should be baked into all phases of the software development process.

In the past, few software engineers, developers, or quality assurance engineers viewed the software testing process holistically. Traditionally, software testing has been separated from the rest of development, left to be executed near the end of the development cycle by quality assurance engineers.

If defects were found, the fixes would most likely be costly and release dates would be pushed out—tossing away company credibility and stakeholder confidence. The result was increased costs and shrinking profits.

In this high-level overview of testing, we’ll put all the pieces of the software testing puzzle together.

Testing can be defined as a process of analyzing a software item to detect the differences between existing and required conditions and to evaluate the features of the software item. In this process, we validate and verify that a software product or application does what it’s supposed to do. The system or its components are tested to ensure the software satisfies all specified requirements.

By executing the systems under development, we can identify any gaps, errors, or missing requirements in contrast with the actual requirements. No one wants the headaches of bug fixes, late deliveries, defects, or serious malfunctions resulting in damage or death.


The people who perform testing and develop testing processes vary greatly from one organization to another. Companies have different designations for people who test the software based on their experience and knowledge, so it depends on the process and the associated stakeholders of a project. Titles such as software quality assurance engineer and software developer are common. These are some general titles and their functions for testing.

QA engineers and software testers are responsible for flushing out defects. Many are experts in software and systems analysis, risk mitigation, and software-related issue prevention. They may have limited knowledge about the system, but they study the requirements documentation and conduct manual and automated tests. They create and execute test cases and report bugs. After development resolves the bugs, they test again.


Software developers may know the entire system—from beginning to end. They’re involved in the design, development, and testing of systems so they know all the guidelines and requirements. Additionally, they’re highly skilled in software development, including test automation.

Project leads and managers are responsible for the entire project: product quality, delivery time, and successful completion of the development cycle. When product issues arise, it’s the project manager who prioritizes the timeframes for resolving them.

End users are the stakeholders or customers. Beta testing gives them a pre-release version of the software, which helps ensure high quality and customer satisfaction. Who better to determine whether the product being delivered is on a trajectory to satisfy its acceptance criteria?

System engineers design and architect the system from gathered requirements and concepts. Because of the body of knowledge they possess about the system, they define system-level test cases to be realized later by the QA team and/or software developers. They also verify that requirements trace to test cases. In highly complex systems where modeling is used, system engineers often perform tests by executing models of the logical and/or physical system design.

An early start to testing is best because it reduces costs as well as the time it takes to rework and produce a clean architectural design and error-free software. Every phase of the software development life cycle (SDLC) lends itself as an opportunity for testing, which is accomplished in different forms.

For example, in the SDLC, a form of testing can start during the requirements gathering phase. Requirements have to be clearly understood. Going back to the stakeholders for clarification and negotiation of requirements is a form of testing the interpretation of the stakeholder requirements to ensure the right system is built. This is a vital part of product and project management. Test cases for acceptance testing also need to be defined.

It’s important to understand that test cases defined during the system engineering phase are text-based test cases that explain what should be tested and how. These test cases will later be realized by the development and/or QA team, built from the system engineers’ text-based test cases as well as the linked requirements. Executing the realized test cases produces the pass/fail results that provide proof of proper functionality and can also be used for any compliance needs.

The requirements decomposition and architectural design phase further details the system at another level of abstraction. Interfaces are defined, and if the system is modeled in SysML, UML, or another language, testing the architecture through simulation to flush out design flaws is another vital task.

During this process, additional requirements are defined, including the test cases that verify and validate each of them. Additional decomposition takes place producing the detailed design.

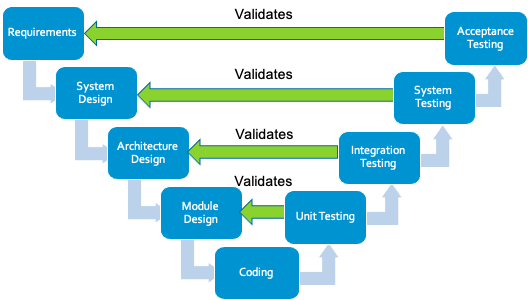

Ultimately, system-level requirements trace to system-level test cases, architectural requirements trace to integration test cases, and the detailed design requirements, or low-level requirements, trace to unit test cases. Verification of requirements can start at this point to ensure that every requirement traces to a test case. A requirements traceability matrix is well suited to finding traceability gaps.
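As a rough illustration of how a traceability matrix exposes gaps, the sketch below models each requirement with the test cases it traces to and reports the requirements that trace to nothing. The `Requirement` class, the `find_traceability_gaps` helper, and all IDs are hypothetical names invented for this example; a real project would pull this data from its requirements management tool.

```python
from dataclasses import dataclass, field


@dataclass
class Requirement:
    req_id: str
    level: str  # "system", "architecture", or "low-level"
    test_case_ids: list[str] = field(default_factory=list)


def find_traceability_gaps(requirements):
    """Return the IDs of requirements that trace to no test case."""
    return [r.req_id for r in requirements if not r.test_case_ids]


# Hypothetical requirements and test case IDs, for illustration only.
requirements = [
    Requirement("SYS-1", "system", ["TC-SYS-1"]),
    Requirement("ARCH-1", "architecture", ["TC-INT-1", "TC-INT-2"]),
    Requirement("LLR-1", "low-level", ["TC-UNIT-1"]),
    Requirement("LLR-2", "low-level"),  # no test case defined yet
]

gaps = find_traceability_gaps(requirements)
print(gaps)  # ['LLR-2']
```

Each row of the list plays the role of one row in the matrix; an empty `test_case_ids` list is the gap that verification needs to close before the requirement can be considered covered.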

When the handoff from system engineers to software engineers takes place, developers begin their implementation based on the requirements. Here, software developers apply, or should apply, coding standards to ensure code quality. Static code analysis is a form of testing that can be applied at the earliest stages of the implementation phase, when defects are also cheapest to fix. It finds coding defects as well as safety and security issues. Unit testing follows, and each realized unit test case must be linked back to the low-level requirement or test case it realizes.

As the implementation of the system evolves, the test cases defined earlier during the systems engineering process must be realized and executed against the system under development, starting with unit testing and followed by integration testing, system testing, and acceptance testing. In addition, depending on the quality-of-service requirements, other test methods may need to be performed, such as API testing, performance testing, stress testing, portability testing, and usability testing.

Software testing methodologies are the strategies, processes, or environments used to test. The two most widely used SDLC methodologies are Agile and waterfall, and testing is very different for these two environments.

For example, in the waterfall model, formal testing is conducted in the testing phase, which begins once the development phase is completed. The waterfall model for testing works well for small, less complex projects. However, if requirements are not clearly defined at the start, it’s extremely difficult to go back and make changes in completed phases.

The waterfall model is popular with small projects because it has fewer processes and players to contend with, which can lead to faster project completion. However, bugs are found later in development, making them more expensive to fix.

The Agile model is best suited for larger development projects. Agile testing is an incremental model where testing is performed at the end of every increment or iteration. Additionally, the whole application is tested upon completion of the project. There’s less risk in the development process with the Agile model because each team member understands what has or has not been completed. The results of development projects are typically better with Agile when there’s a strong, experienced project manager who can make quick decisions.

Other SDLC models include the iterative model and the DevOps model. In the iterative model, developers create basic versions of the software, review and improve on the application in iterations—small steps. This is a good approach for extremely large applications that need to be completed quickly. Defects can be detected earlier, which means they can be less costly to resolve.

When taking a DevOps approach to testing, or continuous testing, development teams collaborate with operations teams throughout the entire product life cycle. With this approach, development teams don’t wait to perform testing until after the software has been built successfully or is nearing completion. Instead, they test the software continuously during the build process.

Continuous testing uses automated testing methods like static analysis, regression testing, and code coverage solutions as part of the CI/CD software development pipeline to provide immediate feedback during the build process on any business risks that might exist. This approach detects defects earlier when they are less expensive to fix, delivering high-quality code faster.
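The feedback loop described above can be sketched as a fail-fast sequence of automated checks. This is only a toy model under stated assumptions: `run_checks` is a hypothetical helper, and the three check functions are stand-ins that return fixed values where a real pipeline would invoke a static analyzer, a regression suite, and a coverage tool.

```python
from typing import Callable


def run_checks(checks: list[tuple[str, Callable[[], bool]]]) -> list[tuple[str, bool]]:
    """Run each check in order and stop at the first failure (fail fast)."""
    results = []
    for name, check in checks:
        passed = check()
        results.append((name, passed))
        if not passed:
            break  # report immediately instead of running later stages
    return results


# Hypothetical stand-ins for real tools; each returns True on success.
def static_analysis() -> bool:
    return True


def regression_tests() -> bool:
    return False  # simulate a regression caught during the build


def coverage_threshold() -> bool:
    return True


results = run_checks([
    ("static analysis", static_analysis),
    ("regression tests", regression_tests),
    ("coverage threshold", coverage_threshold),
])

# The build is acceptable only if every executed check passed.
build_ok = all(passed for _, passed in results)
print(results)   # [('static analysis', True), ('regression tests', False)]
print(build_ok)  # False
```

Stopping at the first failing stage is one possible design choice; it gives the quickest feedback, at the cost of not reporting problems that later stages would have found in the same run.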

Software testing can be performed either manually or through automation. Both approaches have their own advantages and disadvantages, and the choice between them depends on various factors, such as the project’s complexity, available resources, and testing requirements.

Manual testing puts a human in the driver’s seat. Testers run through pre-defined cases or explore the software freely, using their intuition to sniff out unexpected problems. This is ideal for usability testing, where a human perspective is crucial to assess the user interface and overall experience.

On the other hand, automated testing involves using scripts or tools to execute test cases and validate expected outcomes. Automated testing comes in handy for regression testing, where the same test cases need to be executed repeatedly after each code change or update.

Automation can save significant time and effort, especially for large and complex projects because it allows testers to run numerous test cases simultaneously and consistently.
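To make the regression testing case concrete, here is a minimal sketch that replays a recorded baseline of inputs and expected outputs against a function after every change. The `shipping_cost` function, the `run_regression` helper, and the baseline values are all hypothetical and exist only for this illustration.

```python
def shipping_cost(weight_kg: float) -> float:
    """Hypothetical function under test."""
    if weight_kg <= 1:
        return 5.0
    if weight_kg <= 10:
        return 5.0 + 1.5 * (weight_kg - 1)
    return 20.0


# Known-good (arguments, expected result) pairs recorded from an earlier release.
BASELINE = [
    ((0.5,), 5.0),
    ((1,), 5.0),
    ((3,), 8.0),
    ((10,), 18.5),
    ((25,), 20.0),
]


def run_regression(func, cases):
    """Re-run every baseline case and collect the ones whose result changed."""
    failures = []
    for args, expected in cases:
        actual = func(*args)
        if actual != expected:
            failures.append((args, expected, actual))
    return failures


failures = run_regression(shipping_cost, BASELINE)
print(failures)  # []
```

Because the baseline is data rather than hand-executed steps, the same cases can be re-run unchanged after each code update, which is where automation tends to pay back its setup cost.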

The table below sums up the key differences between manual and automated software testing.

| Features | Manual Testing | Automated Testing |
|---|---|---|
| Test Coverage | Limited test coverage due to human constraints | Potential for high test coverage by executing numerous test cases simultaneously |
| Consistency | Prone to human errors and inconsistencies in test execution | Consistent test execution, ensuring repeatable results |
| Maintenance | Test cases and documentation need to be manually updated | Test scripts need to be updated, but can be automated to some extent |
| Initial Investment | Lower initial investment, primarily involving training testers | Higher initial investment for setting up the automation framework and writing scripts |
| Suitability for Regression Testing | Inefficient for extensive regression testing | Ideal for regression testing, allowing efficient re-execution of tests |
| Audit Trail and Reporting | Manual logging and reporting can be time-consuming | Automated logging and reporting capabilities, enabling better traceability |

The most common types of software testing include:

Static analysis involves no dynamic execution of the software under test and can detect possible defects at an early stage, before the program is run. Static analysis is done during or after coding and before executing unit tests. A code analysis engine can automatically “walk through” the source code and detect rule violations as well as lexical, syntactic, and even some semantic mistakes.
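As a small illustration of that “walk through” idea, the sketch below uses Python’s standard `ast` module to parse a snippet and flag two rule violations without ever running it. The two rules (calls to `eval`/`exec` and bare `except` clauses), the `RuleChecker` class, and the `analyze` helper are assumptions chosen for this example; commercial analyzers implement far larger rule sets and deeper analyses.

```python
import ast

# Code under analysis, held as text. It is parsed but never executed.
SOURCE = '''
def load(text):
    try:
        return eval(text)
    except:
        return None
'''


class RuleChecker(ast.NodeVisitor):
    """Collect (line, message) findings for two illustrative rules."""

    def __init__(self):
        self.findings = []

    def visit_Call(self, node):
        # Rule 1: flag direct calls to eval() or exec().
        if isinstance(node.func, ast.Name) and node.func.id in {"eval", "exec"}:
            self.findings.append((node.lineno, f"call to {node.func.id}()"))
        self.generic_visit(node)

    def visit_ExceptHandler(self, node):
        # Rule 2: flag "except:" with no exception type.
        if node.type is None:
            self.findings.append((node.lineno, "bare except clause"))
        self.generic_visit(node)


def analyze(source):
    checker = RuleChecker()
    checker.visit(ast.parse(source))  # builds and walks the syntax tree only
    return sorted(checker.findings)


for line, message in analyze(SOURCE):
    print(f"line {line}: {message}")
# line 4: call to eval()
# line 5: bare except clause
```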

Static code analysis tools examine the code under test for defects, vulnerabilities, and security flaws. Parasoft’s static analysis tools help users manage the results of testing, including prioritizing findings, suppressing unwanted findings, and assigning findings to developers. These tools integrate with a wide range of development ecosystems and IDE products to conduct static analysis for C, C++, Java, C#, and VB.NET.


The goal of unit testing is to isolate each part of the program and show that individual parts are correct in terms of requirements and functionality.

This type of testing is performed by developers before the build is handed over to the testing team to formally execute the test cases. Developers perform unit testing on the individual units of source code in their assigned areas. The developers use test data that is different from the test data of the quality assurance team.

Using Parasoft to perform branch, statement, and MC/DC coverage is a form of unit testing. The software is isolated to each function and these individual parts are examined. The limitation of unit testing is that it cannot catch every bug in the application as it does not evaluate a thread or execution path in the application.
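A minimal sketch of a unit test, written with Python’s built-in `unittest` module, shows what isolating one function looks like. The `clamp` function and its test names are hypothetical; the point is only that each test exercises the unit on its own, and that together the tests take both outcomes of the `if` branch.

```python
import unittest


def clamp(value, low, high):
    """Hypothetical unit under test: restrict value to the range [low, high]."""
    if low > high:
        raise ValueError("low must not exceed high")
    return max(low, min(value, high))


class ClampTest(unittest.TestCase):
    def test_value_inside_range_is_unchanged(self):
        self.assertEqual(clamp(5, 0, 10), 5)

    def test_value_below_range_returns_low(self):
        self.assertEqual(clamp(-3, 0, 10), 0)

    def test_value_above_range_returns_high(self):
        self.assertEqual(clamp(42, 0, 10), 10)

    def test_invalid_range_raises(self):
        # Exercises the "true" outcome of the range check.
        with self.assertRaises(ValueError):
            clamp(1, 10, 0)


# Run the tests programmatically so the results can be inspected afterwards.
suite = unittest.defaultTestLoader.loadTestsFromTestCase(ClampTest)
result = unittest.TextTestRunner(verbosity=0).run(suite)
```

These tests say nothing about how `clamp` behaves once it is called from other parts of an application, which is the limitation noted above and the reason integration and system testing follow.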


Integration testing is defined as the testing of combined parts of an application to determine if they function correctly. Integration testing can be done in two ways:

System testing tests the system as a whole. The system is treated as a black box, so there is no need to understand the inner workings of the system under test. System testing is performed once all the components are integrated: the application as a whole is tested rigorously to see that it meets requirements. This type of testing is performed by the quality assurance testing team.

Acceptance testing is arguably the most important type of testing, as it is conducted by the quality assurance team, which gauges whether the application meets the intended specifications and satisfies the client’s requirements. The QA team will have a set of pre-written scenarios and test cases that will be used to test the application.

More ideas will be shared about the application and more tests can be performed on it to gauge its accuracy and the reasons why the project was initiated. Acceptance tests are not only intended to point out simple spelling mistakes, cosmetic errors, or interface gaps, but also to point out any bugs in the application that will result in system crashes or major errors in the application.

By performing acceptance tests on an application, the testing team will deduce how the application will perform in production. There are also legal and contractual requirements for acceptance of the system.


It’s difficult to determine when to stop testing, as testing is a never-ending process, and no one can claim that software is 100% tested. However, there are criteria to consider that can serve as indicators for putting a stop to testing.

Delivering high-quality software hinges on effective testing. To achieve this, we recommend following these best practices to create a robust testing process.

Integrate testing throughout the software development life cycle (SDLC) from the get-go (shift-left testing). This allows for early defect detection and resolution, saving time and resources later. Additionally, develop a comprehensive testing strategy that aligns with project requirements, goals, and limitations. This plan should outline the testing approach, techniques, tools, and resources needed.

Unambiguous and testable software requirements are fundamental. Clear requirements ensure everyone is on the same page and facilitate the creation of effective test cases.

Utilize test automation frameworks like C/C++test or open source tools like GoogleTest with C/C++test CT and other tools to streamline testing, minimize manual effort, and enhance test coverage and consistency.

Identify potential defects, security vulnerabilities, and coding standard violations early on using static code analysis tools.

Establish traceability between requirements, test cases, and the code. This ensures all requirements are thoroughly tested and that identified defects can be traced down to the code.

Continuously monitor and analyze testing metrics such as compliance with coding standards, test coverage, and defect trends. Leverage this information to pinpoint areas for improvement and adapt the testing strategy and processes accordingly.

Promote collaboration and open communication between developers, testers, and all stakeholders involved. Regular communication and knowledge sharing can help identify potential problems early on and ensure testing aligns with project goals and stakeholder expectations.

Parasoft helps you deliver quality software that’s safe, secure, and reliable, at scale with automated testing solutions that span every stage of the development cycle. Parasoft’s software test automation solutions provide a unified set of tools to accelerate testing by helping teams shift testing left to the early stages of development while maintaining traceability, test result record-keeping, code coverage details, report generation, and compliance documentation.
