See what API testing solution came out on top in the GigaOm Radar Report. Get your free analyst report >>


Ever wondered how best to make test data management (TDM) easier with test simulation? Check out how Parasoft’s virtual test data solution can help you achieve that.


To run integration tests in parallel and shift functional testing left, organizations can use Parasoft’s approach to test data management (TDM), which uses AI, machine learning, and virtual test data to replace the need for physical endpoints and databases. Let’s explore how it works.

Validating and verifying software remains one of the most time-consuming and costly aspects of enterprise software development. The industry has accepted that testing is hard, but the root causes are often overlooked. Acquiring, storing, maintaining, and using test data is difficult and takes too much time.

Industry data suggests that up to 60% of application development and testing time can be devoted to data-related tasks, a large portion of which is test data management. Delays and budget expenditures are only one part of the problem. A lack of test data also results in inadequate testing, which is a much bigger issue because it lets defects creep into production.

Traditional TDM solutions on the market haven’t resolved these test data challenges. Let’s take a look at some of them.

The traditional approaches rely either on copying a production database or, at the opposite extreme, on synthetic, generated data. There are three main traditional approaches.

Testers can clone the production database to have something to test against. Because this is a full copy, the infrastructure that hosts the production database also needs to be duplicated. Security and privacy compliance requires that any confidential personal information be closely guarded, so masking is often used to obfuscate this data.
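To make the masking step concrete, here is a minimal sketch of field-level masking applied to a cloned record. It is a generic illustration, not Parasoft’s implementation: the function names, the `demo-salt` value, and the sample record are all assumptions made for this example, and production masking usually needs stronger guarantees than a salted hash provides.

```python
import hashlib


def mask_value(value: str, salt: str = "demo-salt") -> str:
    """Replace a sensitive value with a deterministic token (hypothetical helper)."""
    digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()
    return "masked_" + digest[:12]


def mask_record(record: dict, sensitive_fields: set) -> dict:
    """Return a copy of the record with only the sensitive fields masked."""
    return {
        key: mask_value(str(val)) if key in sensitive_fields else val
        for key, val in record.items()
    }


# Illustrative record standing in for a row copied from production.
customer = {"id": 42, "name": "Jane Doe", "email": "jane@example.com", "plan": "gold"}
masked = mask_record(customer, {"name", "email"})
print(masked)
```

Because the token is deterministic, the same input always maps to the same masked value, so joins between masked tables keep working.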

A subset is a partial clone of the production database that includes only the portion needed for testing. This approach requires less hardware, but like full cloning, it still requires data masking and infrastructure similar to that of the production database.
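As a rough sketch of what subsetting involves, the snippet below keeps a chosen set of customers together with the orders that reference them, so the subset stays internally consistent. The table shapes and the `subset_with_orders` name are hypothetical and chosen only for illustration.

```python
def subset_with_orders(customers: list, orders: list, customer_ids) -> tuple:
    """Keep the selected customers and only the orders that reference them."""
    wanted = set(customer_ids)
    customer_subset = [c for c in customers if c["id"] in wanted]
    order_subset = [o for o in orders if o["customer_id"] in wanted]
    return customer_subset, order_subset


# Illustrative rows standing in for two related production tables.
customers = [{"id": 1, "plan": "gold"}, {"id": 2, "plan": "free"}, {"id": 3, "plan": "gold"}]
orders = [
    {"order_id": 10, "customer_id": 1},
    {"order_id": 11, "customer_id": 2},
    {"order_id": 12, "customer_id": 1},
]

kept_customers, kept_orders = subset_with_orders(customers, orders, [1, 3])
print(kept_customers, kept_orders)
```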

Synthesized data removes the reliance on customer data while remaining realistic enough to be useful for testing. Synthesizing the complexity of a legacy production database is a large task, but it removes the security and privacy challenges that come with cloning.
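A minimal sketch of synthetic data generation might look like the following. The field names, value ranges, and the `generate_customers` function are assumptions made for this example; a real generator would have to reproduce the schema and constraints of the actual database.

```python
import random


def generate_customers(count: int, seed: int = 0) -> list:
    """Generate reproducible, fake customer rows with no link to real people."""
    rng = random.Random(seed)  # a fixed seed makes test runs repeatable
    plans = ["free", "silver", "gold"]
    customers = []
    for i in range(1, count + 1):
        customers.append({
            "id": i,
            "name": f"Customer {i}",
            "email": f"customer{i}@example.test",
            "plan": rng.choice(plans),
            "balance": round(rng.uniform(0, 5000), 2),
        })
    return customers


rows = generate_customers(5, seed=7)
for row in rows:
    print(row)
```

Seeding the generator is a deliberate choice here: a failing test can be rerun against exactly the same data.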

First, let’s consider the simplest (and surprisingly most common) approach to enterprise TDM: cloning a production database, with or without subsetting. Why is this approach so problematic?

The approach to test data management that we offer at Parasoft in our SOAtest, Virtualize, and CTP virtual test data tools is simpler and safer, and it solves these traditional problems. So how is it different from the traditional approaches?

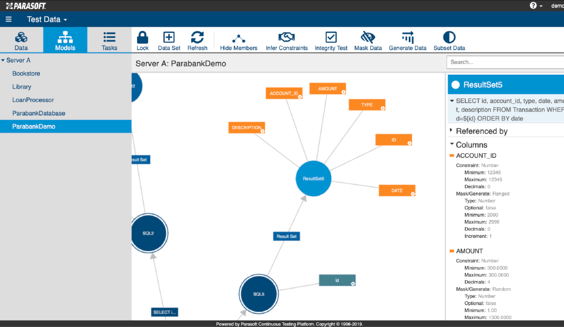

The key difference is that it collects test data by capturing traffic from API calls and JDBC/SQL transactions during testing and normal application usage. Masking is done on the captured data as necessary, and data models are generated and displayed in Parasoft’s test data management interface. The model’s metadata and data constraints can be inferred and configured within the interface, and additional masking, generation, and subsetting operations can be performed. This provides a self-service portal where multiple disposable datasets can easily be provisioned to give testers full flexibility and control of their test data, as you can see in the screenshots below:

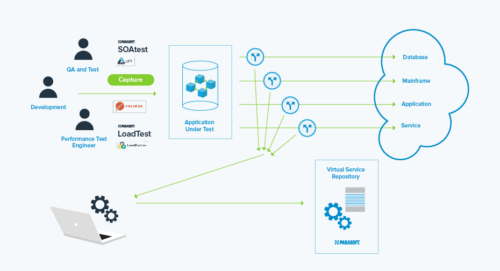
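To give a feel for what inferring a data model from captured traffic can mean, here is a simplified sketch that derives field types, nullability, and numeric ranges from a handful of captured records. It does not reflect how Parasoft’s tooling works internally: the `infer_model` function and the sample records are hypothetical.

```python
def infer_model(records: list) -> dict:
    """Infer a simple per-field model (types, nullability, numeric range)."""
    model = {}
    for record in records:
        for field, value in record.items():
            info = model.setdefault(
                field, {"types": set(), "nullable": False, "min": None, "max": None}
            )
            if value is None:
                info["nullable"] = True
                continue
            info["types"].add(type(value).__name__)
            # Track a numeric range; bool is excluded because it subclasses int.
            if isinstance(value, (int, float)) and not isinstance(value, bool):
                info["min"] = value if info["min"] is None else min(info["min"], value)
                info["max"] = value if info["max"] is None else max(info["max"], value)
    return model


# Illustrative records standing in for payloads captured from API traffic.
captured = [
    {"account_id": 1001, "status": "active", "balance": 250.0},
    {"account_id": 1002, "status": "closed", "balance": 0.0},
    {"account_id": 1003, "status": None, "balance": 75.5},
]

model = infer_model(captured)
for field, info in model.items():
    print(field, info)
```

Constraints inferred this way could then drive generation or subsetting, for example by producing new `balance` values only inside the observed range.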

Parasoft’s virtual test data management technology is augmented by service virtualization, where constrained back-end dependencies can be simulated to unblock testing activities. A good example is replacing a shared physical database with a virtualized database that simulates the JDBC/SQL transactions, which allows parallel and independent testing that would otherwise conflict. Parasoft’s test data management engine extends the power of service virtualization by allowing testers to generate, subset, mask, and create individual customized test data for their needs.

By replacing shared dependencies such as databases, service virtualization removes the need for the infrastructure and complexity required to host the database environment. In turn, this means isolated test suites and the ability to cover extreme and corner cases. Although the virtualized dependencies are not the “real thing,” stateful actions, such as insert and update operations on a database, can be modeled within the virtual asset.
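The idea of a stateful virtual asset can be sketched with a small in-memory stand-in for a shared table. This is only a conceptual illustration under assumed names (`VirtualAccountsTable` and its methods are hypothetical); an actual virtual asset would simulate JDBC/SQL traffic rather than expose a Python class.

```python
import copy


class VirtualAccountsTable:
    """In-memory stand-in for a shared database table, seeded per test."""

    def __init__(self, seed_rows):
        # Deep-copy the seed so every instance is an isolated, disposable dataset.
        self._rows = {row["id"]: copy.deepcopy(row) for row in seed_rows}

    def select(self, row_id):
        row = self._rows.get(row_id)
        return copy.deepcopy(row) if row is not None else None

    def insert(self, row):
        if row["id"] in self._rows:
            raise ValueError(f"duplicate id {row['id']}")
        self._rows[row["id"]] = copy.deepcopy(row)

    def update(self, row_id, **changes):
        if row_id not in self._rows:
            raise KeyError(row_id)
        self._rows[row_id].update(changes)


seed = [{"id": 1, "owner": "test-user", "balance": 100}]

# Two testers work in parallel, each against a private virtual table.
db_a = VirtualAccountsTable(seed)
db_b = VirtualAccountsTable(seed)

db_a.update(1, balance=0)                                     # stateful update
db_a.insert({"id": 2, "owner": "edge-case", "balance": -1})   # corner-case row

print(db_a.select(1), db_a.select(2))
print(db_b.select(1), db_b.select(2))
```

Changes made through `db_a` persist for later calls on `db_a` but never leak into `db_b`, which is the isolation property that lets otherwise conflicting tests run side by side.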

The key advantage of this approach is that it avoids the complexities and infrastructure costs of cloning databases, allowing API-level testing (such as integration testing) much earlier than other test data methods do.

A few other benefits to this approach include:

Testing on the physical database is still necessary, but only towards the end of the software delivery process, when the whole system is available. This approach to test data doesn’t eliminate the need for testing against the real database. Instead, it reduces reliance on the database in the earlier stages of the software development process to accelerate functional testing.

Traditional approaches to test data management for enterprise software rely on cloning production databases and their infrastructure, which is fraught with cost, privacy, and security concerns. These approaches aren’t scalable and result in wasted testing resources. Parasoft’s virtual test data solution puts the focus back on testing and on-demand reconfiguration of the test data, allowing for parallel integration testing that shifts this critical stage of testing left.