How can you automate REST API testing with a large request payload? Read on to learn more.

How do you build a suite of a few dozen REST API test cases, all with very large request payloads, in a matter of seconds? Taking a scientific approach to testing helps create consistency in REST API test automation, but even scientists need a hand every now and then.

Testers are the last line of defense between our applications and their increasingly tech-savvy audiences. Customers no longer tolerate an application that reaches the market with defects or performance issues. As a result, testers have to be smart and able to test modern applications in the most impactful ways possible. Testing is a science, and it requires a systematic approach to validating an application.

But even with a scientific approach to testing, software testing isn’t so simple. Testers commonly go through a process such as the following:

Testing is not a simple affair, so we need all the support we can get to build experiments that provide meaningful feedback on whether our applications are built correctly. It’s also important for us as testers to share with each other any methods we discover for making testing easier. Here is one of those methods. Below, I’ll explain a recent REST API testing challenge I had and share how I solved it.

Modern web apps send RESTful JSON API calls from the browser to the server because JSON data is easy for JavaScript code to consume. But creating test automation scripts with that JSON data isn’t always so easy. Recently, I ran into a testing headache caused by large JSON request payloads in the service I was testing, and I was able to use Parasoft SOAtest’s new Smart API Test Generator to help.

Unlike large request payloads, large response payloads are easy for testers to deal with. Call the service, save the response, and then compare future responses against it for differences. Strip out any values that always change, such as dates or timestamps. Rinse and repeat. However, this is all predicated on calling the service in the first place. With large request payloads, you need to configure a lot of data before making each service call, and you need to make sure it is all correct. Sure, you can copy and paste from the browser developer tools, but with many REST API calls, that means a lot of copying and pasting. That’s why it’s so exciting to be able to use the Smart API Test Generator.
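To make the save-and-compare idea concrete, here is a minimal Python sketch of a response diff that ignores values that always change. It is not how SOAtest implements its diff controls. The key names in `VOLATILE_KEYS` and the function names are assumptions chosen for illustration.

```python
import json

# Hypothetical names of fields whose values change on every call.
VOLATILE_KEYS = {"timestamp", "date", "lastModified"}


def strip_volatile(value, volatile_keys=VOLATILE_KEYS):
    """Return a copy of parsed JSON with volatile keys removed at every depth."""
    if isinstance(value, dict):
        return {
            key: strip_volatile(child, volatile_keys)
            for key, child in value.items()
            if key not in volatile_keys
        }
    if isinstance(value, list):
        return [strip_volatile(child, volatile_keys) for child in value]
    return value  # strings, numbers, booleans, and null pass through unchanged


def responses_match(baseline_text, current_text):
    """Compare a saved baseline response with a new one, ignoring volatile keys."""
    baseline = strip_volatile(json.loads(baseline_text))
    current = strip_volatile(json.loads(current_text))
    return baseline == current
```

A regression check would then save the first response as the baseline and call `responses_match(baseline, new_response)` on each later run.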

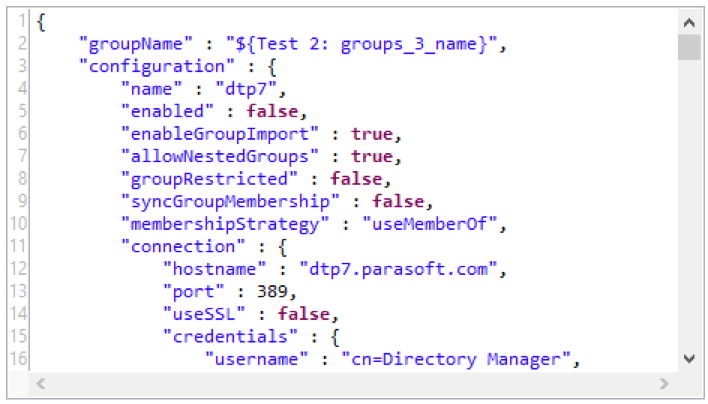

My recent project involved a web configuration page for integrating with LDAP and Active Directory servers. The concept was simple: configure the settings and then test by listing user and group accounts. The problem was that an LDAP configuration has lots of settings, and testing those settings requires sending all of them in the request payload. Furthermore, extra calls were needed to test the membership of each group. Each request ended up being hundreds of lines of JSON data.

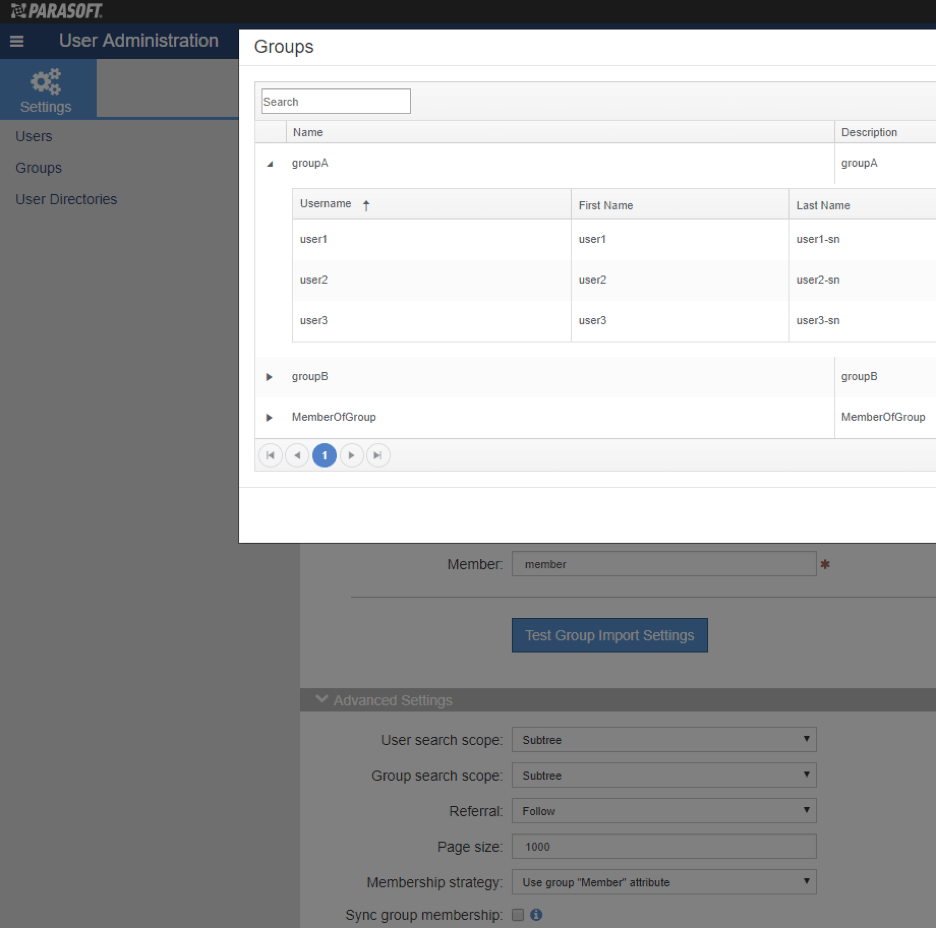

I was working on adding support for a new membership strategy. The only JSON data I cared about testing was on line 10, but all the other lines of data were still required to make the whole thing work. So, I set up my configuration page to point to an LDAP server with testing data and turned on recording using the Parasoft Recorder extension for Chrome. I clicked buttons to test users and groups, and I expanded each group to see the members. Each time I clicked, a few REST API calls were made to the web server.

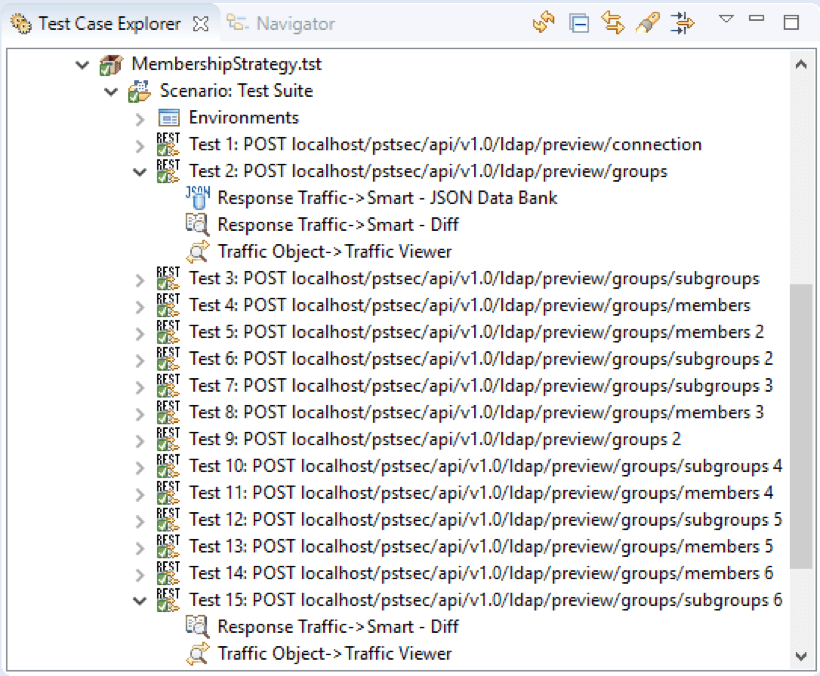

The hypothesis was that the membership strategy would affect the groups and members in the preview. I changed the membership strategy on the configuration page and clicked through the test data again. Visually, I could see different group membership results in the dialog. I was satisfied with my manual test, so I stopped recording and generated a very smart set of API tests:

And there it was: within seconds I had a suite of a few dozen REST API tests that all had very large request payloads. Only a few properties, like group name and membership strategy, changed between the requests, but that was enough to get a variation in responses and save a diff control for each one. The generator was even smart enough to extract the group names from the first group preview response and store them in a data bank for parameterized use in the following tests. Seeing all the tests pass gave me confidence that my new membership strategy feature was working correctly.
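For readers who want to see the parameterization pattern outside the tool, here is a rough Python sketch of the same idea: one large base payload, a few overridden properties per request, and group names pulled from an earlier response. It only illustrates the pattern and is not the generator's output. The field names (`membership.strategy`, `preview.groupName`, `groups`, `name`) and all function names are hypothetical.

```python
import copy


def build_payload(base, overrides):
    """Return a deep copy of the base payload with dotted-path overrides applied."""
    payload = copy.deepcopy(base)
    for path, value in overrides.items():
        *parents, leaf = path.split(".")
        target = payload
        for key in parents:
            target = target[key]  # walk down to the parent object
        target[leaf] = value
    return payload


def extract_group_names(groups_response):
    """Collect group names from a parsed group preview response (assumed shape)."""
    return [group["name"] for group in groups_response.get("groups", [])]


def member_requests(base, groups_response, strategy):
    """Build one membership request per group, changing only two properties."""
    return [
        build_payload(
            base,
            {"membership.strategy": strategy, "preview.groupName": name},
        )
        for name in extract_group_names(groups_response)
    ]
```

The base payload can stay hundreds of lines long. Each test case only states the handful of properties that differ, which keeps the property under test easy to spot.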

This was all done using an LDAP server with test data instead of real user accounts. I can ensure that the test data will not change, but real users come and go over time. Changing data can create a lot of noise in automated test regression controls. If you don’t have stable test data for your application, I suggest that you check out web service or database virtualization offered by Parasoft Virtualize.

As I discussed at the beginning of this post, taking a scientific approach to testing helps create consistency. But even the best scientists need a hand every now and then! The technique I described above is like using a high-powered microscope instead of a magnifying glass. It’s a significant leap forward in what is otherwise a very complicated process and, at least for me, it made solving my testing challenge much faster. I hope that it does the same for you. Happy testing!