See what API testing solution came out on top in the GigaOm Radar Report. Get your free analyst report >>

Implementing and maintaining the right coding standard for your dev projects is key to delivering secure software solutions. In this post, our expert discusses why you should ensure that you maintain the right coding standard and how you can do this.

Software has moved from the desktop to just about everything we touch. From smart thermostats to infusion pumps to cars, software is pervasive and growing. The so-called “things” in the Internet of Things (IoT) increasingly carry more logic and, with it, a larger risk of failure. Many of these devices are used in safety-critical areas such as medical and automotive, where they have the potential to cause bodily harm.

Most companies that build devices rightly view current software development as an almost insane group of cowboys and chaos. But there is hope. Software CAN and MUST be treated as an engineering practice. Coding standards, which are part and parcel of good software engineering practice, move us from the “build, fail, fix” cycle to a “design, build, deliver” cycle with high quality, safety, and security.

As it turns out, these same standards also provide benefits in the areas of cybersecurity, doing double duty. This post discusses:

Software’s impact on the real world is often discounted. One of the main themes I discuss as an evangelist at Parasoft is that software development really should be engineering.

We frequently call software developers by the title software engineers, but that’s not necessarily the proper term for how they’re working today. Evolving into a good software engineering practice results in costs going down and quality going up. A key part of this is adopting standards—in particular, coding standards.

The age of software-defined vehicles, medical and industrial IoT, and perpetually connected devices is here. Software is creeping into products, devices, and other places we never thought of. We now must think hard about the software in these products and the ramifications of it.

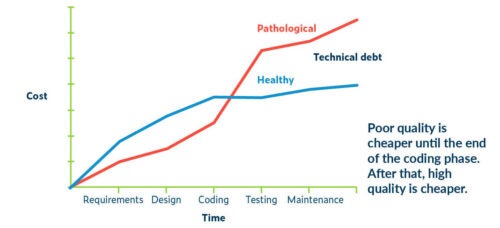

One of the interesting things that I try to explain is that building good software is different from building something like a car. If I’m trying to build a high-quality car, I must spend more on materials and more time to build it. It turns out that in software, you don’t spend more to build high quality software. You spend more to build poor quality software.

We must understand that in software, most of the defects come from the programmer, who puts them in the product. If we can stop introducing defects as we develop the software, we can have much better software, at a lower price.

This idea dates back to 1972, to a talk called “The Humble Programmer” by Edsger W. Dijkstra. It’s still very relevant today.

It’s important to realize how quality impacts software development costs. Capers Jones, a researcher, has been following this for decades and performs a survey every year on software costs. The numbers don’t change much year to year. The data shows that the typical cost of software from requirements to coding to maintenance goes up with each phase. However, the way teams approach quality determines whether their process is healthy or “pathological.”

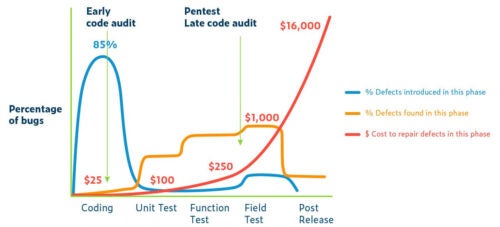

It makes sense that if we find a defect right as the code is written, fixing it is relatively inexpensive: a few minutes of a developer’s time, for example. If it’s possible to eliminate 85% of the defects in the development phase, there’s a big impact on cost. Consider the now-famous graph from Capers Jones showing the average cost of fixing defects at each stage of development:

Fixing defects post-release costs about $16,000 (possibly much more), based on research into real companies doing real software, not a theoretical model. Late-cycle quality and security efforts such as penetration testing find security issues at the expensive end of the cycle. Finding vulnerabilities through late testing is probably 15 times as expensive as finding them through early security audits.

An outdated and provably false approach is to improve the quality of your software by testing it at the end of the lifecycle, just before release. In the manufacturing world, they understand this is impossible, but for some reason, we think we can test quality and security “into” software.

The reasons why I say that software development is almost never engineering are these common characteristics of current software development:

The goal of software coding standards is to instill proven programming practices that lead to safe, secure, reliable, testable, and maintainable code. Typically, this means avoiding known unsafe coding practices or code that can cause unpredictable behavior. This becomes critical in programming languages like C and C++ where the potential to write insecure or unsafe code is high.

However, I think the industry has lost its way with these programming standards. In the last decade, tools such as static analyzers have shifted from detecting potentially problematic code (code that’s unsafe or relies on a known language weakness) to looking for defects as a form of early testing, also known as shifting left.

Although looking for defects is important, building sound software is a more productive activity. What we should be doing is building and enforcing standards to avoid the situation where defects are introduced in the first place—shifting even farther left.

To back this up, consider the research done by the Software Engineering Institute (SEI) where they found, unsurprisingly, that security and reliability go hand-in-hand and that security of software can be predicted by the number and types of quality defects found. In addition, critical defects are often coding mistakes that can be prevented through inspections and tools such as static analysis.

This post doesn’t go into detail on each of these coding standards, but there is a considerable body of work in the following industry standards. Although each was written with a specific type of application in mind, these standards are being adopted in many industries. Here are some examples of established coding standards for safety and security.

Let’s consider MISRA C, which, as I mentioned, isn’t just for automotive applications. It’s a well-defined standard that’s been in use since 1998 and is revised every couple of years. As the C and C++ languages evolve, the standards around them evolve too. It’s a very flexible standard that takes different severity levels into account, and it has a documented strategy for handling and documenting deviations.

As the technology that can detect violations of coding standard guidelines, such as static analysis, has matured, recent versions of MISRA take into account which guidelines are decidable (detectable with high precision by tools) and which aren’t. This brings us to the topic of adoption and enforcement and the importance of static analysis tools in coding standards.

Research shows that inadequate defect removal is the main cause of poor-quality software. Programmers are about 35% efficient at finding defects in their own software. Later in the development cycle, after all the design reviews, peer reviews, unit tests, and functional tests, the most we can hope to remove is about 75% of defects.

Static analysis, when used properly in a preventative mode, can increase defect removal to about 85%. However, the focus of static analysis usage for most organizations today is on detection and the quick fix.
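To make these percentages concrete, here is a small sketch of the arithmetic. The function name `remaining_defects` and the figure of 1,000 injected defects used in the note below are hypothetical and chosen only for illustration. Only the 35%, 75%, and 85% removal rates come from the discussion above.

```c
#include <stdint.h>

/* Defects left in the product after a removal process that catches
 * efficiency_percent (0..100) of the defects that were injected.
 * Hypothetical helper for illustration only. */
uint32_t remaining_defects(uint32_t injected, uint32_t efficiency_percent)
{
    uint64_t removed;

    if (efficiency_percent > 100u) {
        efficiency_percent = 100u;   /* clamp out-of-range input */
    }

    /* 64-bit intermediate so injected * percent cannot overflow */
    removed = ((uint64_t)injected * efficiency_percent) / 100u;

    return injected - (uint32_t)removed;
}
```

For an assumed 1,000 injected defects, this gives 650 escaped defects at 35% removal, 250 at 75%, and 150 at 85%. Under that assumption, moving from 75% to 85% removal cuts the number of defects that reach later phases by 40%.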

Further benefits are possible when static analysis tools are used to prevent known poor programming practices and language features in the first place. This is where coding standards come into play as guidelines and programming language subsets that prevent common defects like buffer overflows or missing initialization from being written into code.

Consider an example where a rather complex buffer overflow error is detected during testing, perhaps with a dynamic application security testing (DAST) tool. This is a stroke of luck, since your testing just happened to execute the code path that contains the error. Once detected and debugged, the fix needs to be retested, and so on. Static analysis using flow analysis might also have found this error, but that depends on the complexity of the application.

Runtime error detection is precise, but it only checks the lines of code that you execute, so it’s only as good as your test code coverage. Now consider what would have happened if a coding standard had prohibited the code that enabled this error in the first place.
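As a rough illustration of the difference, the sketch below contrasts an unbounded copy, which overflows only when a long input happens to arrive, with a version that makes the overflow impossible by construction. The names `NAME_CAPACITY`, `set_name_unchecked`, and `set_name` are hypothetical, and the "always check the length before copying" rule is an example of the kind of guideline a coding standard might impose, not a quotation from any particular standard.

```c
#include <stddef.h>
#include <string.h>

#define NAME_CAPACITY 16u   /* hypothetical fixed buffer size */

/* Risky pattern: overflows dst only when src holds NAME_CAPACITY or more
 * characters, so a test suite that never sends a long name never sees it. */
void set_name_unchecked(char dst[NAME_CAPACITY], const char *src)
{
    strcpy(dst, src);
}

/* Preventative pattern: the length is checked before any byte is written.
 * Returns 0 on success and -1 if the input is rejected. */
int set_name(char dst[NAME_CAPACITY], const char *src)
{
    size_t len;

    if ((dst == NULL) || (src == NULL)) {
        return -1;
    }

    len = strlen(src);
    if (len >= NAME_CAPACITY) {
        return -1;              /* reject instead of overflowing or truncating */
    }

    memcpy(dst, src, len + 1u); /* len + 1 copies the terminating '\0' too */
    return 0;
}
```

With the second form, no test has to be lucky enough to hit the long-input path, because that path can no longer write past the end of the buffer.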

Coding standards like MISRA C don’t describe how to detect uninitialized memory, for example, but rather guide programmers to write code that won’t lead to such an error in the first place. I believe this is much more of an engineering approach: Program according to well-known and accepted standards. Take, for example, civil engineering and building bridges.
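A minimal sketch of what that guidance can look like in practice follows. It is written in the spirit of initialization rules found in standards such as MISRA C, not copied from one, and the function name `average` is hypothetical. The risky variant is shown only in a comment so that the block compiles cleanly.

```c
#include <stddef.h>
#include <stdint.h>

/* Risky variant (shown as a comment only):
 *
 *     int32_t sum;                          // no initial value
 *     for (i = 0; i < count; ++i) { sum += samples[i]; }
 *
 * Here sum is read before it has ever been set, so the result is
 * unpredictable, and the error surfaces only at run time, if at all. */

/* Preventative variant: every object has a defined value before it is read,
 * and the empty-input case has an explicit, documented result (0). */
int32_t average(const int32_t *samples, size_t count)
{
    int64_t sum = 0;      /* wider type so the running total has headroom */
    int32_t result = 0;

    if ((samples != NULL) && (count > 0u)) {
        for (size_t i = 0u; i < count; ++i) {
            sum += samples[i];
        }
        result = (int32_t)(sum / (int64_t)count);
    }

    return result;
}
```

Nothing here detects uninitialized memory. The code is simply written so that the error cannot occur.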

We wouldn’t build a bridge and then test it by driving bigger and bigger trucks over it until it collapsed, measure the weight of the last successful truck, and build it again to withstand that new weight. This approach would be foolish, yet it’s not unlike the way we approach software development.

Once a software team adopts a coding standard and static analysis is applied properly, they can detect errors early and prevent them. In other words, instead of finding a defect early, which is good, the team is changing the way they write code, which is better!

Consider the Heartbleed vulnerability. There are now detectors for this specific instance of vulnerability, but there’s a way to write code so that Heartbleed could never have happened in the first place. Prevention is a better, safer method.
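Heartbleed came down to trusting a length field supplied by the sender when copying data into a reply. The sketch below shows the preventative pattern on a simplified, hypothetical echo message (a 2-byte big-endian length followed by the payload). It is not OpenSSL's code or the actual TLS heartbeat format, and `HEADER_SIZE` and `build_echo_reply` are names invented for this example.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

#define HEADER_SIZE 2u   /* hypothetical layout: 2-byte length, then payload */

/* Copies the payload of msg into reply.
 * Returns 0 and sets *reply_len on success, or -1 if the message is rejected. */
int build_echo_reply(const uint8_t *msg, size_t msg_len,
                     uint8_t *reply, size_t reply_cap, size_t *reply_len)
{
    size_t claimed;

    if ((msg == NULL) || (reply == NULL) || (reply_len == NULL)) {
        return -1;
    }
    if (msg_len < HEADER_SIZE) {
        return -1;                       /* too short to hold a length field */
    }

    /* Length the sender claims the payload has */
    claimed = ((size_t)msg[0] << 8) | (size_t)msg[1];

    /* Key check: the claimed length must fit in what was actually received */
    if (claimed > (msg_len - HEADER_SIZE)) {
        return -1;
    }
    if (claimed > reply_cap) {
        return -1;                       /* reply buffer is too small */
    }

    if (claimed > 0u) {
        memcpy(reply, msg + HEADER_SIZE, claimed);
    }
    *reply_len = claimed;
    return 0;
}
```

A rule such as "never use externally supplied lengths without validating them against the actual buffer size" turns this check into a habit rather than something a test has to discover.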

Dijkstra said, “Those who want really reliable software will discover that they must find means of avoiding the majority of bugs to start with.” The cost of a solid prevention methodology is less than the cost of fixing these bugs.

Coding standards embody sound engineering principles for programming in their respective languages and form the basis of any preventative approach. The cost of good software is less than the cost of bad software. If you’re not using static analysis today, or you’re only using it for early detection, take a look at Parasoft’s static analysis tools for C and C++, Java, and C# and VB.NET with a rich library of checkers for all the popular safety and security standards built in.